Summary: The US Department of Health and Human Services (HHS) conducts a survey of patient experiences, the HCAHPS Hospital Quality Survey. The survey results are used, in part, to determine government reimbursement to specific hospitals based upon the quality of care. But does the survey truly measure hospital quality? This article examines some of the administration and instrumentation biases that, to my surprise, are present in the survey. In fact, the most important question has the most serious design shortcomings.

~ ~ ~

Important Financial Role of the HCAHPS Survey

A front-page article in the Wall Street Journal on October 15, 2012, “U.S. Ties Hospital Payments to Making Patients Happy,” reported on the role of a patient satisfaction survey used by the US Department of Health and Human Services (HHS) to measure hospital quality. This is not just a “nice to know” survey. The results are applied in a formula that determines the amount of reimbursement that hospitals get from HHS.

In fact, the survey results comprise 30% of the evaluation. Not too surprisingly, hospitals whose reimbursements have been negatively impacted by the survey have questioned the appropriateness of using patient feedback to determine reimbursements. They have also questioned the methodology of the survey.

Given its impact, HHS treats the survey methodology with some care. Online, I found academic articles discussing the adjustments made to the results based upon the mode of survey administration (phone vs. postal mail). But upon looking at the survey process itself, I found that biases from the instrument and the administration process abound.

Administration Biases in HCAHPS Survey

The survey may be conducted by vendors either by postal mail or by telephone. Let’s consider the hardcopy, postal mail survey.

- Recall bias. A postal mail invitation is sent some time after the hospital stay, with a follow-up reminder note 3 weeks later. That long time lag results in recall bias, which can be thought of as simple forgetfulness. Even those who respond promptly to a postal mail survey will have some recall bias, but those who respond only after the reminder note will have serious recall bias. It’s very likely that impressions of a hospital stay change over time. Were adjustments made for when people responded? I didn’t see any.

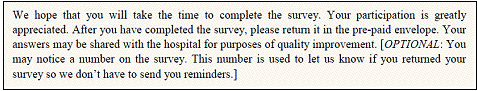

- Lack of anonymity. Curiously, the cover letter contains no statement of anonymity. Indirectly, the survey tells respondents that their submissions are not anonymous. The survey, and optionally the cover letter, states: “You may notice a number on the survey. This number is used to let us know if you returned your survey so we don’t have to send you reminders.” I say “optionally” because the vendors approved to conduct the survey on behalf of hospitals apparently have some leeway in what the cover letter includes. That struck me as odd, since the cover letter shapes the respondent’s mental frame when approaching the survey, biasing the results yet again.

- Lack of confidentiality. Most surprising to me, there’s no statement of confidentiality. We are told, “Your participation is voluntary and will not affect your health benefits” and “Your answers may be shared with the hospital for purposes of quality improvement.” Those are pretty thin statements of confidentiality. Who will get access to individual submissions? The survey never spells this out, nor does it say whether “your answers” that are “shared with the hospital” will include the respondent’s (the patient’s!) name. Yikes!

In the age of HIPAA (the Health Insurance Portability and Accountability Act), I found this most odd. Because of this lack of confidentiality, I would not complete this survey, which is exactly the kind of response bias at issue. While my “participation” may “not affect [my] health benefits,” it might affect how the hospital and staff treat me the next time I’m at the hospital!

Consider the rather vulnerable position in which patients find themselves at a hospital. Basically, you’re being asked to be a whistleblower (assuming you have negative comments) with no protection. Granted, it might be far-fetched to think that a hospital employee who got called out in a survey would mistreat the patient who gave a bad review, but if you’re in a vulnerable health situation, would you take the chance?

This response bias may lead people to not participate, and it may also color how they respond if they do complete the survey — a measurement error.

Instrumentation Biases in HCAHPS Survey

Now let’s turn to the instrumentation biases in the survey instrument itself.

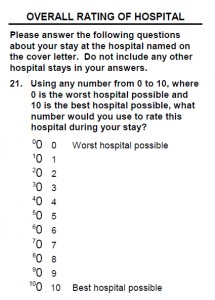

The most puzzling question to me is the one HHS probably uses most: Question 21. The last section for feedback is about your “Overall Rating of Hospital.” The instructions are quite explicit:

Do not include any other hospital stays in your answer.

Since this is a transactional survey, I understand the need to keep the respondent focused on this one event. But Question 21 then asks for a comparative answer:

Using a number from 0 to 10, where 0 is the worst hospital possible and 10 is the best hospital possible, what number would you use to rate this hospital during your stay.

Please tell me how you can answer that question without thinking about “other hospital stays.” You can’t. The question is asking for a comparison, and the instructions tell you that you shouldn’t compare!

I am frankly stunned that this type of wording error could be in a survey question that is so critical to so many organizations in our nation.

Further, there’s no option to indicate you cannot make a comparison, while other less critical questions allow the respondent to indicate the question is not appropriate or applicable to them.

Consider my situation. I have not had an overnight hospital stay since I had my tonsils out when I was 5 — many, many decades ago. I have been fortunate that any medical issues have only required outpatient procedures.

What if I then required a hospital stay and was asked to take this survey? How would I answer the comparative question? I couldn’t, without simply fabricating a benchmark based on general perceptions. How valid would my data be? How would you like to be a hospital president whose reimbursement is reduced based upon such data?

Even if I had had other hospital stays, wouldn’t it be analytically useful to know how many stays I’ve had in the past X years that form my comparison group? In fact, shouldn’t the comparison group be limited to hospital stays in the past few years? (Maybe they have all that data in some database on all of us, a scary thought but not out of the question in these days of “command and control” central authorities.)

For a question that is so critical to the overall assessment, it has serious shortcomings.

The survey instrument has other design aspects that don’t thrill me.

- Almost all the questions are posed on a frequency scale ranging from Never to Always. Use of this scale presumes that the characteristic being measured happens, or could have happened, numerous times.

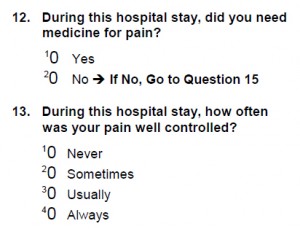

- Questions 12 to 14 ask about controlling pain with medication. Question 13 again uses the “how often” phrasing (“how often was your pain well controlled?”), implying pain is a discrete event rather than a continuous one. Question 14 asks whether “the hospital staff [did] everything they could to help you with your pain?” Yet, as noted in the Wall Street Journal article, proper pain management does not mean providing any and all medication to relieve pain. The question conflicts with proper medical practice, which is of particular concern in the age of opioid abuse.

- Questions 1 and 5 ask if the nurses and doctors, respectively, treated you “with courtesy and respect.” Those two attributes are very similar, but there is a difference, which is somewhat rooted in the patient’s cultural background. To some extent this is a double-barreled question, which clouds both the respondent’s ability to answer the question and the analyst’s ability to interpret the results.

- Question 4 asks, “after you pressed the call button, how often did you get help *as soon as you wanted it*?” (my emphasis) First, the question assumes that everyone knows what the “call button” is. That is probably safe, but “call button” is hospital jargon, and we should avoid industry jargon in surveys since it can introduce ambiguity. More importantly, the wording implies that the call button system can differentiate the priority of the patient’s issue. It can’t: every call has to be answered as if it’s critical, so less critical calls could impede the ability to respond to more critical ones. They could have asked for a quasi-objective answer, “after you pressed the call button, how long did it take for someone to respond,” recognizing that people commonly overestimate wait times, especially in urgent situations. Instead, they have introduced real subjectivity with the phrasing.

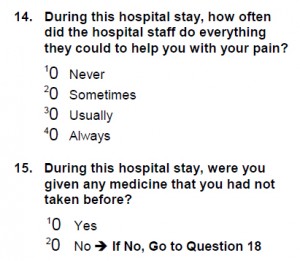

- Question 15 asks if you were “given any medicine that you had not taken before?” The response options are Yes and No. The missing option is Not Sure. Nor does the question ask how many new medicines were taken. This distinction matters for Question 16, which asks, on that frequency scale, “how often did hospital staff tell you what the medicine was for.” What if you took only one new medicine? The response then has to be either Never or Always. Again, for analytical purposes the count of new medicines is important.

- Question 8 asks, “how often were your room and bathroom kept clean?” The lead-in phrase “how often” implies discrete events. “How often were your room and bathroom cleaned?” is more appropriate phrasing for the scale used.

Admittedly, some of these are minor issues, but many are not. In such an important survey, shouldn’t the instrument have as clean a design as HHS expects of patients’ “rooms and bathrooms”?

~ ~ ~

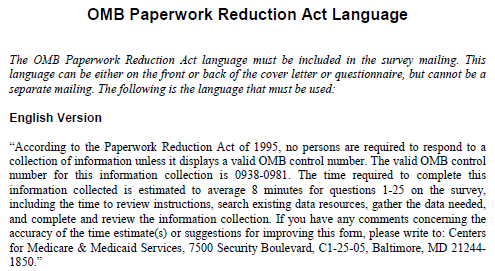

Finally, in a delightful bit of bureaucratic irony, the survey must include a long paragraph to fulfill the requirements of the “OMB Paperwork Reduction Act of 1995”. This verbiage may require the printing of an extra page, increasing the paperwork!

In fact, the designers of the survey violated the goals of this Act with their choice of instrument layout, which increases the physical length of the survey. Had the designers used a “table” format, somewhat as HHS did here in describing the survey, the survey’s physical length would have easily been cut in half or more and the survey could have been completed more quickly. Now that’s paperwork reduction.

But more importantly to the validity of the survey results, isn’t the language of this required statement a bit intimidating? This “collection of information” instrument must have a “valid OMB control number.” If you’re unfamiliar with OMB and bureaucratic language, this phrasing could scare people off from taking the survey. I’d be curious to know if the impact of the statement upon the survey responses has been tested.

Oh, yes. “What’s OMB?” you ask. Office of Management and Budget, of course. The acronym is never written out for the respondents, introducing yet more ambiguity, and maybe intimidation. Apparently, we’re all supposed to know the government’s acronym dictionary.

How silly. How presumptuous. How damaging to data validity.