University Help Desk Survey Creation

I had the opportunity to talk with Joyce Sandusky of Clayton State University, just south of Atlanta, about the survey program she helped design for Clayton’s technical support center. From previous conversations with her, I knew she had invested a lot of time, energy, and thought into the program, so I thought her experiences and lessons could benefit others, whether you’re creating a survey for a university IT help desk or for a corporate help desk.

Joyce, thanks for taking the time to chat. First, briefly describe the Clayton help desk survey program.

“The HUB” is the name of our help desk. It supports all technology on campus: laptops, desktops, telephones, and so on. Last December [2006], we surveyed staff, faculty, and students to learn how they felt about our quality of service.

What was the impetus for creating the program?

After attending your seminar at a Help Desk Institute conference, I was motivated to create a better survey for our support center. We have been in operation for 10 years, and five years ago we did a very crude, 10-question survey, sending a survey invitation for every 5th ticket at closure. We didn’t realize it at the time, but the survey was heavily biased because it didn’t give respondents a chance to say they were unhappy. So we went around telling everybody that we had a 97% approval rating. Once we recognized the bias, we knew we needed a better survey if we were going to learn how well our help desk was actually performing.
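The old invitation rule, one invitation for every 5th ticket at closure, is a form of systematic sampling. Here is a minimal Python sketch of that rule, assuming closed tickets arrive in closure order. The function name `select_for_survey` and the ticket IDs are hypothetical.

```python
from typing import Iterable, List


def select_for_survey(closed_ticket_ids: Iterable[int], every_nth: int = 5) -> List[int]:
    """Return the IDs of every nth ticket, in closure order (systematic sampling)."""
    if every_nth < 1:
        raise ValueError("every_nth must be at least 1")
    selected = []
    for position, ticket_id in enumerate(closed_ticket_ids, start=1):
        # Invite only the 5th, 10th, 15th, ... ticket to close.
        if position % every_nth == 0:
            selected.append(ticket_id)
    return selected


# Hypothetical ticket IDs 101..120, closed in that order.
print(select_for_survey(range(101, 121)))  # [105, 110, 115, 120]
```

The sampling rule itself was not what biased the old survey. As Joyce explains, the bias came from questions that gave respondents no way to say they were unhappy.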

So how did you proceed to create a survey program?

When I was out on medical leave for a month last summer [2006], I bought and read your customer survey book. When we created our own customer loyalty program, we used the book as our instruction manual. It was very easy to understand and follow, and I felt we did a lot better with it as a guide than we would have on our own. Given the number of times I read your book, you’d think I was a stalker.

We knew this first survey would be rough, and we tried to follow the book as closely as we could to be sure we were doing it right. We decided to do an annual survey. It has turned out better than I thought it would.

We started in July 2006 and created a customer loyalty team composed of faculty, staff, and students, though the student representative eventually fizzled out. Our intention was to build a foundation for an ongoing program. We held an initial meeting with all of the technical support specialists to introduce the customer loyalty program. We then held a meeting of the full customer loyalty team, which included representatives from each of our customer bases (faculty, staff, and students).

Within that team, a questionnaire design team focused on creating the survey instrument. From your book I knew it would take a lot of time, and we proved it! This team conducted focus groups with each of the groups we serve, then met to analyze the focus group data. They identified the attributes of our help desk service that left an impression on customers.

In the staff focus group, we asked the participants to tell us about an experience they had had with the HUB. We learned that the staff didn’t know how to contact us and that their idea of an emergency was different from ours. We realized that we needed to ask how well they felt we responded to their issues. That discussion also revealed that we needed a survey question about how easy it is to work with our procedures.

Ultimately, the focus groups told us that we needed to survey three areas: policies and procedures, speed of problem resolution, and courtesy of staff. The survey had one question for each of those areas.

Since we had three different groups of customers, we tailored the survey to each group, asking different questions of faculty, staff, and students.

So, you set up some branching logic once you identified the type of respondent?

Yes. However, the soft-skill questions on the survey were the same for all three groups, for example, “Did we show concern for your time?”
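A branching design like this one can be sketched as a lookup on respondent type: shared soft-skill items first, then the group’s own items. The minimal Python sketch below assumes exactly that structure. Only “Did we show concern for your time?” comes from the interview. The placeholder questions, the names `SHARED_QUESTIONS`, `GROUP_QUESTIONS`, and `build_questionnaire` are hypothetical. Keeping the branch table explicit also makes it easier to check, which matters given the branching problem described below.

```python
from typing import Dict, List

# Soft-skill questions shared by every respondent type.
# The first item is quoted from the interview; any others would be hypothetical.
SHARED_QUESTIONS: List[str] = [
    "Did we show concern for your time?",
]

# Hypothetical placeholders: one bank of group-specific questions per customer group.
GROUP_QUESTIONS: Dict[str, List[str]] = {
    "faculty": ["<faculty-specific question (hypothetical)>"],
    "staff": ["<staff-specific question (hypothetical)>"],
    "student": ["<student-specific question (hypothetical)>"],
}


def build_questionnaire(respondent_type: str) -> List[str]:
    """Branch on respondent type: shared items first, then that group's items."""
    key = respondent_type.strip().lower()
    if key not in GROUP_QUESTIONS:
        raise ValueError(f"unknown respondent type: {respondent_type!r}")
    return SHARED_QUESTIONS + GROUP_QUESTIONS[key]


print(build_questionnaire("Faculty"))
```

Rejecting an unknown respondent type with an error, rather than silently skipping the group-specific questions, is one way to keep a branching mistake from quietly dropping data.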

Once you completed the survey questionnaire, how did you administer the survey?

Your book said you need a budget. We had no budget because we’re a university. However, we were able to use university resources, including a programmer, and I was allowed to dedicate the majority of my time to the project. Unfortunately, doing it all in-house also led to our biggest problem. The first programmer had never built any type of survey, and he consumed most of our allotted time. Two weeks before we wanted to send out the survey, he told us he couldn’t do it. We scrambled to find another in-house programmer and got a guy who was really sharp. He did a great job for us, and quickly. However, we ran into a problem with the branching questions, and we feel we may have lost a lot of data from the technical questions on the survey.

We sent out 7,200 email invitations and got back 292 responses, 74 of which included open-ended comments. Most of those comments were positive.
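Those counts translate into two rates worth tracking from one annual survey to the next. Here is a small Python sketch. Only the three counts come from the interview. The helper name `rate` is ours.

```python
def rate(part: int, whole: int) -> float:
    """Return part / whole as a percentage."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


invitations = 7200  # email invitations sent (from the interview)
responses = 292     # responses received (from the interview)
comments = 74       # responses that included open-ended comments (from the interview)

response_rate = rate(responses, invitations)
comment_rate = rate(comments, responses)
print(f"Response rate: {response_rate:.1f}%")            # Response rate: 4.1%
print(f"Responses with comments: {comment_rate:.1f}%")  # Responses with comments: 25.3%
```

A response rate of roughly 4% is a reason to be cautious about how well the respondents represent the whole campus, a point the timing discussion bears out.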

But the timing [for sending out the invitations] was poor. We wanted to send it out over Thanksgiving week, but because of the programming problems, it didn’t go out until December 12. Classes had ended December 10. We know now that we needed to send it earlier.

Also, about half of our student body is non-traditional, and 55% of respondents were 30 or older. Most of the students who responded were non-traditional, since they seemed to be the ones who checked their email after the semester ended. We have a lot of services available to non-traditional students, which may explain why we got such positive results.

That’s an interesting example of an administration bias, resulting from the timing of the survey invitations and the broad nature of the respondent group.

We also got a low faculty response.

That’s probably because they were busy grading final exams. Did you provide any incentive?

We had a minor incentive. We gave the first 100 respondents a free sandwich at Chick-fil-A, which they [Chick-fil-A] donated. Of those 100, only about 40 took the sandwich.

That may have introduced an administration bias as well. If you don’t like their chicken, it’s not much of an incentive.

What did the survey results show?

The results showed that the big problem area was communication; for example, did we explain how much time it would take to resolve the problem? We got high scores on almost all soft skills, but not on communication.

We asked how many people had visited our website. It was about 80%. Over half the faculty had visited the self-help guides, but only 10% of students had. What we gleaned from this was that we needed to keep the self-help guides up to date for faculty, but we might not invest resources in self-help for student-related issues.

I took a course in Minitab last fall, but I had to relearn it this spring when doing the data analysis. Minitab has the best customer support; it’s incredible. In one case, the support person actually did the analysis and sent it back to me. If I couldn’t figure out how to do something in Minitab, I did it in Excel. In hindsight, I might have just used Excel.

I wish I were more familiar with statistics, and I hope our skills in that area will grow every year. We had a business professor work with us on analyzing the results. He has written an article about the survey results that he hopes to get published in an educational journal.

Several times you’ve mentioned the effort to create the survey program. How much time did you personally spend on the survey design effort?

As I said, I started in June 2006 when I was on medical leave. I spent all of June and July on this. From August through February, I then spent two weeks of every month on it full time. From March to mid-May, I spent three weeks each month doing the analysis and writing the report.
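Tallying those phases gives a rough sense of the scale. Here is a back-of-the-envelope Python sketch. It assumes an average month of about 4.33 weeks and treats “mid-May” as half a month. Both assumptions are ours.

```python
WEEKS_PER_MONTH = 52 / 12  # assumption: an average month is about 4.33 weeks

# (phase, months covered, weeks of effort per month), as described in the interview.
effort_phases = [
    ("June-July 2006, full time", 2.0, WEEKS_PER_MONTH),
    ("August-February, 2 weeks per month", 7.0, 2.0),
    ("March-mid-May, 3 weeks per month", 2.5, 3.0),  # assumption: mid-May = half a month
]

total_weeks = 0.0
for label, months, weeks_per_month in effort_phases:
    phase_weeks = months * weeks_per_month
    total_weeks += phase_weeks
    print(f"{label}: {phase_weeks:.1f} weeks")
print(f"Total: about {total_weeks:.0f} weeks of full-time effort")
```

Under these assumptions the total comes to about 30 weeks, more than half a year of one person’s full-time effort.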

That is a lot of time! What’s next for the customer loyalty program?

We spent the first few months of this year [2007] analyzing the results. The team met in May to go over the results and is planning our next survey for the fall of 2007. We started planning in July for a Thanksgiving rollout. This year it won’t take as long.

One of the things we’re doing differently this fall is using survey software that the university is looking into purchasing for faculty. That’s a big change. For security reasons, the university insisted that we use purchased software rather than a hosted survey.

What key lessons did you learn from this survey that our readers should know?

The most important lesson we learned is to allow more time. I thought we had allowed a lot of time, but we could have used more.

I wish we had had the money to have someone like you review the survey. But until the surveys yield some benefit, our management is not likely to put money into the program.

That’s a classic Catch-22 that many people confront. If management isn’t willing to invest in the survey, they’ll never see the benefits. But if they don’t see benefits, they won’t invest the necessary resources.

Any final thoughts?

Two things from your book stay with me most. First, create a budget. Second, allow enough time to do it right. Both proved so true for our program, and no one should underestimate the time it takes to create a good survey.

Thanks for sharing, Joyce.

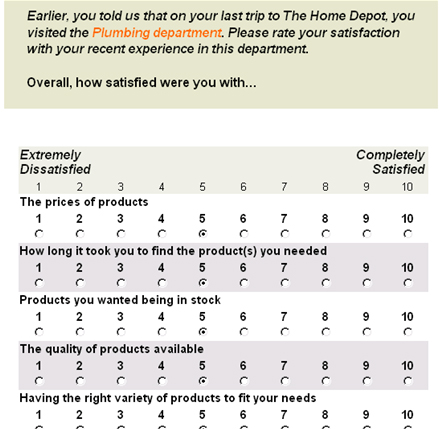

Second, the designers also make the survey feel longer through their survey design. They chose to use a 10-point scale. (See the example nearby.) Now, when you think about the helpfulness of the associate in the plumbing department, can you really distinguish between a 6, 7, and 8 on the scale? Was the interaction so intense that you could distinguish your feelings with that level of precision? Of course not. The precision of the instrument exceeds the precision of our cognition. This is like trying to state the temperature to two decimal places using a regular window thermometer! But the request for that precision lengthens the time the respondent needs to choose an answer, with likely no legitimate information gain. People ask me, “How many points should a survey scale have? Aren’t more points better?” Not if the added respondent burden exceeds the information gain.

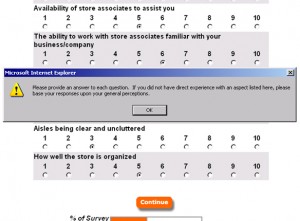

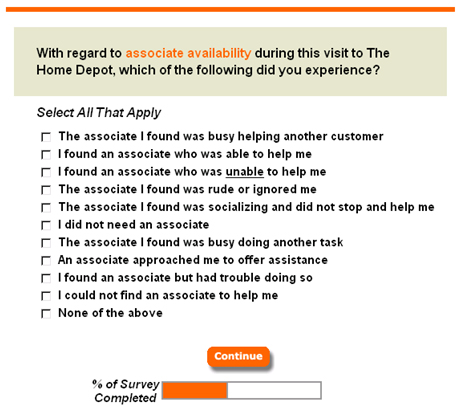


Fourth, the survey designers explicitly want you to add garbage to their data set! A response is required on every question, grossly increasing respondent burden. Plus, some of the questions simply are not relevant to every shopping visit. Look at the example below for the error message you’ll receive if you leave an answer blank.
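A toy example shows how a forced response can contaminate an item’s average. The Python sketch below is a minimal illustration built on assumptions. The 10-point answers are hypothetical and not from any real survey. A blank, or “not applicable,” answer is modeled as `None`. Shoppers who could not judge the item are assumed to pick a 5 when forced to answer.

```python
from statistics import mean
from typing import List, Optional


def item_mean(answers: List[Optional[int]]) -> Optional[float]:
    """Average one question's scores, skipping blank / not-applicable answers (None)."""
    scored = [a for a in answers if a is not None]
    return mean(scored) if scored else None


# Hypothetical answers to a question that did not apply to two of the five visits.
optional_answers = [9, 8, None, None, 9]  # blanks allowed
forced_answers = [9, 8, 5, 5, 9]          # response required: the two who could not judge picked a 5

print(f"{item_mean(optional_answers):.2f}")  # 8.67
print(f"{item_mean(forced_answers):.2f}")    # 7.20
```

The forced answers pull the average down even though they carry no real information about the visit. That is the “garbage” the required-response rule adds to the data set.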

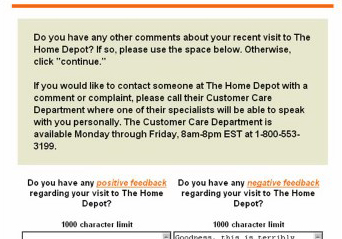

Toward the end, it asks for comments. I left some comments and asked whether anyone actually reads them. I gave my email address and asked for a reply, but no one ever replied. I figured I’d at least get a form reply. Do you think anyone actually reads the comments in surveys like these?


Let me be fair to Reichheld. At the end of the article he drops some critical pearls of wisdom about Enterprise’s survey system. It’s a phone survey, and information from unhappy customers is forwarded to the responsible branch manager, who then engages in service recovery actions with the customer, followed by root cause identification and resolution.
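The first step of that closed loop, forwarding unhappy customers to the responsible branch manager, amounts to filtering and grouping responses by branch. The article gives no details of Enterprise’s actual scale or criteria. This minimal Python sketch therefore assumes a hypothetical 1-5 rating, treats scores of 1 or 2 as unhappy, and uses hypothetical customers, branches, and names (`PhoneSurveyResponse`, `route_unhappy`). Service recovery and root-cause work remain human steps and are not modeled here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PhoneSurveyResponse:
    customer: str
    branch: str
    score: int  # hypothetical 1-5 satisfaction rating


UNHAPPY_MAX = 2  # assumption: scores of 1 or 2 count as "unhappy"


def route_unhappy(responses: List[PhoneSurveyResponse]) -> Dict[str, List[str]]:
    """Group unhappy customers by branch so each branch manager gets a follow-up list."""
    queues: Dict[str, List[str]] = defaultdict(list)
    for response in responses:
        if response.score <= UNHAPPY_MAX:
            queues[response.branch].append(response.customer)
    return dict(queues)


responses = [
    PhoneSurveyResponse("Customer A", "Branch 12", 2),
    PhoneSurveyResponse("Customer B", "Branch 12", 5),
    PhoneSurveyResponse("Customer C", "Branch 40", 1),
]
print(route_unhappy(responses))
```

With these inputs, the Branch 12 manager would follow up with Customer A and the Branch 40 manager with Customer C.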
