Surveys’ Negative Impact on Customer Satisfaction

Summary: A long-understood but seldom-followed truism of organization design is that reporting for operational control and reporting for management control should not be mixed. Tools designed to give front-line managers information about operational performance become compromised when they are used for performance measurement. This is true of customer satisfaction surveys used for operational control, including Reichheld’s Net Promoter Score®. NPS® was intended to be an operational control tool, but when it is used for performance measurement, its deleterious effects become visible. Customer feedback surveys are one element of an organization’s measurement systems, and the survey program needs to be considered in full context. That ensures the measurements are not corrupted and, more importantly, that the survey program doesn’t create customer dissatisfaction in the very process of attempting to measure customer satisfaction.

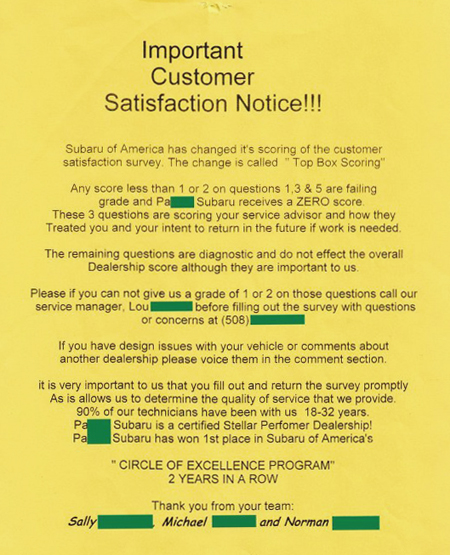

Nearby you’ll see an “Important Customer Satisfaction Notice!!!” that was attached to my repair bill from a local Subaru dealer. Take a read of it. Even though I do surveys professionally, I try to listen to my gut when I take a survey or see something like the flyer in the nearby image. How am I reacting to it? How would a “normal” person — that is, someone for whom surveying is not the center of his work life — react to this? What’s your reaction to it at both an intellectual level and a gut level?

[Let me note that I am a member of the Subaru cult. It’s the only car brand I’ve owned since 1977, and I even got my wife to buy one for her latest car. I am not bashing Subaru’s products here, but perhaps I am bashing their use of a survey mechanism. Besides, I suspect that this same approach is used by many, many other car dealership brands, but I only see Subaru’s business processes.]

Your first reaction is probably that the writer’s English skills are woeful, and that’s being kind. With my professorial red pen, I count 16 editing corrections on one page. Three people’s names appeared at the bottom of the page. (I blanked them out for privacy reasons.) Didn’t they proofread this? Maybe they did, which would be really sad. If I were the owner of this dealership, I would be embarrassed. The sloppiness sends a message about the dealer as a whole; it’s a window into the dealership’s concern for quality. One can only hope they are better, more careful mechanics than writers!

Your second reaction is probably something like, “Why are they telling me this now? Why didn’t they just do a better job in the first place? Do they expect me to now lie on the survey?” I’ve shown this sheet in my customer feedback workshop, and that is the type of reaction I’ve heard.

Another reaction I’ve heard is that this “Notice!!!” isn’t about customer satisfaction at all; it’s about how headquarters scores the dealership. That led readers to seriously question the sincerity of the dealership’s motives.

Plus, they put the onus on me, the customer, to reach out to them so they can fix their problems, all so that I will then give them high scores.

Lastly, they sure are using a lot of loaded language to impress you after the fact!

As a teacher of more than 23 years, I can relate to being on the receiving end of this kind of after-the-fact plea. Students frequently approach me to say, “I’m concerned about my grade.” Many times it’s a part-time MBA student who adds, “I don’t get reimbursed in full by my employer unless I get at least a B+ in the course.” Want to guess when they approach me? Yes, in the last week of the semester, just before the final exam. Basically, they’re asking me not to apply the same grading standards to them as to the rest of the class. My response is usually something akin to, “If this is so important, why didn’t you work harder during the semester? And why are you coming to me now, when the semester is over?”

During the ’80s I developed management and operational reporting systems for Digital Equipment Corporation’s field service division in the US. One lesson I learned over and over again is that a reporting tool can’t serve two masters. If it’s to be used for operational improvement, then it should be used solely for that purpose. Once operational control reports are used for performance measurement, the validity of the data deteriorates. Those being measured can improve their actual performance to move the reported numbers, or they can manipulate the data collection and reporting systems to move the numbers, or both. Trust me, the data collection systems will be manipulated. At DEC we called it “pencil whipping” the forms that the techs filled out.

Fred Reichheld, the father of the Net Promoter Score®, lamented how his child is being misused. An attendee at the NPS® conference in early 2009 posted a blog entry about Reichheld’s comments. Essentially, Reichheld had envisioned NPS® as a tool to drive change in customer-facing operations, but it is increasingly used as a performance-metric club to beat people up with. Worse yet, many companies think their feedback surveys need to contain only the “one number they need to grow.” A one-question survey cannot serve as an operational control tool: the score may tell managers that customers are unhappy, but not what to fix.

Colleagues of mine, Sam Klaidman and Dennis Gershowitz, point out in one of their articles that this focus on getting good NPS® scores tempts front-line managers to “fix” the survey score before it is submitted, just as the dealership’s notice attempts to do. The unintended consequence is a black hole where knowledge of what’s really happening at the front line should be. They refer to these as “gratification surveys.”

A survey program needs to be one element of a broader, strategic customer feedback program. If you plug in a survey without thinking more broadly, you will get reactions like the ones described above. I am glad that the dealership is taking customer satisfaction seriously, but its approach just feels wrong. Why didn’t the service advisor ask me some questions when he handed me my bill? Or someone could have called me the next day. If you take that route, however, make sure the person placing the call is a trained professional. I have received such calls from car repair shops, and it was clear that the callers had not been trained. One awkward call left me feeling more skeptical about the dealership. (It may have been this same dealership!) And don’t mention the survey. Once you mention the survey, you’ve crossed the line into manipulation.

Subaru’s failure at the headquarters level was twofold: it implemented the survey program without training those being measured in what to do with the results, and it made this customer feedback system a key element of its dealership reward system.

How would you react if you were getting beaten up over survey scores? Perhaps the way this Subaru dealership has reacted: work the system. The Law of Unintended Consequences rears its ugly head. Ugh.

Have you found that your survey program got corrupted once it started to be used for performance appraisal? Let us know.

~ ~ ~

I received more responses to this article than to perhaps any other I’ve posted. Here’s one of them.

This was my story one year ago. I took my car in for service for the first time at this dealer. The price was outrageous, and they did work I hadn’t approved.

There was a copy of the customer survey form at the checkout desk, with a note attached saying they would get bad ratings if any area was not rated 9 or above, so please fill it out that way, basically. (I can’t recall the exact wording, but that was the gist of it.) Then the gal checking me out told me the exact same thing: that I would be getting a survey from J.D. Power and they really wanted us to rate them 9 or above so they could keep their rating level.

Then, on my receipt, there was a note asking me to please call the Customer Service Manager if I was thinking of rating them anything below a 9 in any category. Well, I promptly gave him a call and told him that if they needed a 9+ so badly, perhaps they should try focusing more on service than on instructions about how to fill out the survey with the desired numbers. He apologized and offered to fix any of my service problems from the day before.

After they had worked on those issues, he called me to follow up. The issues had not been resolved to my satisfaction, and I told him so. I also told him I would not be giving the 9s he was looking for.

I never received a survey.

Penny Reynolds

Senior Partner, The Call Center School