Summary: Perhaps the most frequently raised topic in the field of surveys, especially customer satisfaction surveys, is response rates.

- What’s a good response rate?

- What’s the statistical accuracy with a certain response rate?

But what is sometimes missed is that the response rate should not be the only concern. Bias in the response group should be an equally important concern. Lots of responses will give good statistical accuracy, but that accuracy is a false sense of security if there’s a sample bias. In particular, we’ll discuss the link between survey fatigue and non-response bias.

~ ~ ~

A client of mine posed the following question to me on the topic of survey response rates and statistical accuracy.

We launched our survey and have about 22% response rate so far. Using your response rate calculator, I find we currently have about an accuracy of 95% +/- 6%, and to get to a +/- 5% accuracy we’d need about 70 more responses. I also remember us discussing that a survey with >30% response rate is an indicator of survey health.

So, my question is how important is it to get to 30% when the accuracy factors are already met?

I get this type of question frequently in my survey workshops, so I thought it made good fodder for an article on the difference between survey accuracy and survey bias. Prepare to be upset. It is possible to have a high degree of statistical accuracy and yet have misleading, bad data. Why? Because you could have a biased sample of data.

Let’s define bias first — with its antonym. An unbiased survey would mean that those who completed the survey for us were representative of the population, which is our group of interest. Accordingly, we would feel comfortable, within some level of confidence, projecting the findings from the sample data to the population as a whole.

However, since we are not getting data from everyone, there will be some random error in our results. That is, the findings from our sample will not match exactly what we would get if we successfully got everyone in the population to complete the survey. That difference is known as sampling error, and the statistical accuracy tells us how much sampling error we may have in our data.
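As a rough illustration of how that statistical accuracy is usually computed, here is a minimal sketch using the textbook margin-of-error formula for a proportion with a finite population correction. The function name `margin_of_error`, the population of 1,000, and the 220 responses are hypothetical values chosen for illustration; whether any particular response rate calculator uses exactly this formula is an assumption.

```python
import math

def margin_of_error(n, population, z=1.96, p=0.5):
    """Half-width of the confidence interval for a proportion.

    n          -- number of completed responses
    population -- size of the group we want to describe
    z          -- z-score for the confidence level (1.96 is about 95%)
    p          -- assumed proportion; 0.5 is the most conservative choice
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    # Finite population correction: sampling a large share of a small
    # population shrinks the sampling error.
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc

# Hypothetical survey: 1,000 invitations, 220 responses (22% response rate).
moe = margin_of_error(n=220, population=1000)
print(f"95% confidence, +/- {moe:.1%}")
```

With these made-up numbers the result lands a little under 6 points. Note what the formula takes as input: only the counts. Nothing in it knows who responded or why.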

But bias is different, and high accuracy does not mean there’s no bias.


I work a lot with technical support groups. They frequently survey their customers after a service transaction to gauge the quality of service delivery, but usually some questions are included to measure views on the underlying product being supported. Are the findings about the product learned from a tech support survey a good unbiased indicator of how the entire customer base feels about the product? Probably not. The data are coming from people who have had issues with the product and thus needed support. It’s biased data.

Further, many tech support groups send out a follow-up survey for every incident. While we may complete them at first, after a while most of us would tire of the constant requests for feedback, especially if we doubt they do anything with it. When might we then take the survey? When we’re motivated by a particularly good or bad experience.

The self-selection bias, also known as a non-response bias or participation bias, is now distorting the data. Those who chose not to respond are likely different from those who did.
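To make the distortion concrete, here is a small simulation sketch. Every number in it is an assumption picked for illustration: a population in which 80% of customers are satisfied, with dissatisfied customers responding 40% of the time and satisfied customers only 15% of the time. The function name `simulate_survey` is likewise hypothetical.

```python
import math
import random

def simulate_survey(population=50_000, true_satisfied=0.80,
                    p_respond_satisfied=0.15, p_respond_unsatisfied=0.40,
                    seed=1):
    """Simulate a survey where unhappy customers respond more often."""
    rng = random.Random(seed)
    responses = []
    satisfied_total = 0
    for _ in range(population):
        satisfied = rng.random() < true_satisfied
        satisfied_total += satisfied
        # Assumed self-selection: response odds depend on the experience.
        p_respond = p_respond_satisfied if satisfied else p_respond_unsatisfied
        if rng.random() < p_respond:
            responses.append(satisfied)
    n = len(responses)
    observed = sum(responses) / n
    # 95% margin of error computed from the respondents alone.
    moe = 1.96 * math.sqrt(observed * (1 - observed) / n)
    return {
        "response_rate": n / population,
        "true": satisfied_total / population,
        "observed": observed,
        "margin_of_error": moe,
    }

result = simulate_survey()
for name, value in result.items():
    print(f"{name}: {value:.1%}")
```

Under these assumptions the survey reports satisfaction far below the true level, while the margin of error computed from the responses stays small. The accuracy figure looks reassuring precisely because it cannot see who chose not to answer.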

A recent online article by ICMI (International Customer Management Institute) drew from a recent Associated Press article on survey fatigue. “The more people say no to surveys, the less trustworthy the data becomes, Judith Tanur, a retired Stony Brook University sociology professor specializing in survey methodology, told the Associated Press.”

Ironically, the author in that article then avers, “Social media is also a great channel for customer satisfaction feedback.” But this is simply trading one form of sample bias for another. Is the feedback garnered through social media representative of a company’s entire customer base? It is clearly a biased sample. A significant proportion of people have little or limited interest in the social media world.

This point was made in a February 11, 2012 article by Carl Bialik, The Numbers Guy of the Wall Street Journal. In his article, “Tweets as Poll Data? Be Careful,” he notes:

Still, there are significant arguments against extrapolating from people who tweet to those who don’t: Twitter users tend to be younger and better educated than nonusers, and most researchers get only a sample of all tweets. In addition, it isn’t always obvious to human readers — let alone computer algorithms — what opinion a tweet is expressing, if there is an opinion there at all.

I do agree that we should use multiple research methods, including social media, to draw on each method’s strengths. But know your biases, and know that biases are just as important as statistical accuracy.

Many different ways exist to introduce bias into our data inadvertently – or intentionally if we’re trying to lie with our statistics! Yes, my Pollyannaish friends, people do intentionally skew survey data.

- We might use a survey method that appeals to one class of our population more than another. Think about who in your circle of friends or business associates is a “phone person” versus an “email person”.

- We might survey during some religious holidays, driving down responses from a group.

- If we’re sending invitations to just a sample and not to the entire population, we might not have done random sampling — or done it correctly — likely introducing a bias.

- Maybe account managers get to choose who in a customer site gets the survey invitation; guess who they’re going to cherry-pick?

- Or maybe the car salesman gives you a copy of the survey you’ll be receiving, explaining that he’ll get the $200 for his daughter’s braces if you give him all 10s.

All of these can introduce bias into the data set, but the non-response bias caused by survey fatigue is particularly perplexing because it’s nearly impossible to measure. How can we tell how the non-respondents differ from the respondents if we can’t get the non-respondents to respond?

Quite a Catch-22.

What we can say for sure is that as the response rate drops, the likelihood of significant non-response bias grows.

There’s no magic threshold for a “healthy survey,” but a statistically accurate survey could have significant non-response bias — or other administrative biases. Higher response rates lower the impact of this self-selection bias.
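One way to see why higher response rates help: the population value is a weighted blend of respondents and non-respondents, so the gap between what respondents report and the true value equals the non-response share times the difference between the two groups. The sketch below applies that identity with hypothetical satisfaction figures (60% among respondents, 85% among non-respondents). Holding that difference fixed while the response rate changes is a simplifying assumption; in practice the difference may shift as more people respond.

```python
def nonresponse_bias(response_rate, respondent_value, nonrespondent_value):
    """Gap between what respondents report and the true population value.

    Follows from: population = rate * respondents + (1 - rate) * nonrespondents.
    """
    return (1 - response_rate) * (respondent_value - nonrespondent_value)

# Hypothetical: respondents are 60% satisfied, non-respondents 85% satisfied.
for rate in (0.10, 0.30, 0.60, 0.90):
    bias = nonresponse_bias(rate, 0.60, 0.85)
    print(f"response rate {rate:.0%}: bias {bias:+.1%}")
```

The catch, of course, is that the non-respondent value is exactly the number we cannot observe, so this is a way to reason about the risk rather than a way to measure it.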

In a future article I’ll discuss ways to combat non-response bias. But some of you are probably thinking, “How bad can this non-response bias be? Will it really affect decisions if I have a high statistical accuracy?”

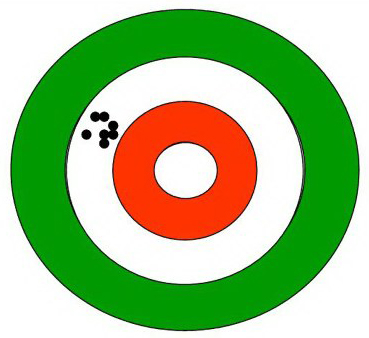

Here’s an example that helps explain bias versus accuracy, inspired by the elections currently occurring in the US. [Note this article was written in 2008.] Imagine we decided presidential elections based on the results from one state. We would certainly have enough votes for a statistically accurate result.

Take Wyoming. Over 254,000 people voted in the 2008 election. About 132 million people voted in the US in total. (Technically, the “population” in the surveying sense is larger, but it makes no difference in the results.) Wyoming voters alone would give a statistical accuracy of +/- 0.2% at a 99% confidence level. (Please note the decimal point!) That’s incredible statistical accuracy. Yet, Wyoming voted 65% to 33% for McCain over Obama. The nation as a whole voted 53% to 46% for Obama. Following the logic that only accuracy matters, wouldn’t it be a lot simpler to just have Wyoming vote than the entire nation? After all, we’d have highly statistically significant results with little sampling error — and think of all the money that wouldn’t be wasted on campaigns.

The liberals reading this (like my statistician brother-in-law) are screaming at me about how unfair it would be to let Wyoming pick our president. Okay. Let’s decide the election based on the voting in the District of Columbia. DC residents cast about the same number of votes as Wyoming, almost 266,000, in the 2008 election. Same accuracy. Same administration bias, and then some, but in the opposite direction. DC voted 93% to 7% for Obama. Now my conservative friends are screaming.
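The arithmetic behind these two examples can be sketched in a few lines. The vote counts and percentages are the rounded figures quoted above. The formula is the standard margin of error for a proportion at a 99% confidence level; the finite population correction is left out because, as noted, the population size makes essentially no difference here. The helper name `margin_of_error` is only illustrative.

```python
import math

Z_99 = 2.576  # z-score for a 99% confidence level

def margin_of_error(n, p, z=Z_99):
    """Sampling error for an observed proportion p from n responses."""
    return z * math.sqrt(p * (1 - p) / n)

NATIONAL_OBAMA = 0.53  # national Obama share quoted in the text

samples = {
    "Wyoming": {"votes": 254_000, "obama": 0.33},
    "District of Columbia": {"votes": 266_000, "obama": 0.93},
}

for name, s in samples.items():
    moe = margin_of_error(s["votes"], s["obama"])
    miss = s["obama"] - NATIONAL_OBAMA
    print(f"{name}: +/- {moe:.2%} sampling error, off by {miss:+.0%}")
```

In both cases the sampling error is a fraction of a percentage point, while the distance from the national result is tens of points. The margin of error describes how well the sample reflects the group it was drawn from, not how well that group reflects the nation.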

~ ~ ~

Statistical accuracy alone doesn’t tell the whole story of how sound our survey administration practices are. We have to challenge ourselves, asking whether we may have introduced a bias into the data from our survey administration practices.

Biases are truly the hidden danger to getting meaningful results for decision making.