Impact of Mobile Surveys — Tips for Best Practice

Summary: Mobile survey taking has shot up recently. When a survey invitation is sent via email with a link to a webform, up to a third of respondents take the survey on a smartphone or equivalent. Survey designers must consider this when designing their surveys since some questions will not display properly on a mobile device. This article presents the issue and some tips for good practice.

~ ~ ~

If you do any kind of design work that involves information displays on webpages, you know the challenges of designing when you don’t know the screen size that will be used. It makes you long for the days of green screens and VT100s, when the mouse was something that your cat killed and a trackpad was somewhere you ran.

Screen sizes and resolution always seem to be in flux as we’ve moved from desktops to laptops, netbooks, smartphones, tablets, and phablets.

As part of rewriting my Survey Guidebook, I have been talking with survey software vendors, and the dramatic rise of survey taking via smartphones is a big issue. Roughly one quarter to one third of survey submissions now come from smartphones. Ouch!

The issue is: how does your survey render onto a smartphone? Website designers have tackled this with responsive website design, and the same issues apply for surveyors. But the issue is perhaps more critical for surveyors. While the webforms might be responsive to the size of the screen on which the survey will be displayed, the fact is some survey questions simply will not display well on a small screen.

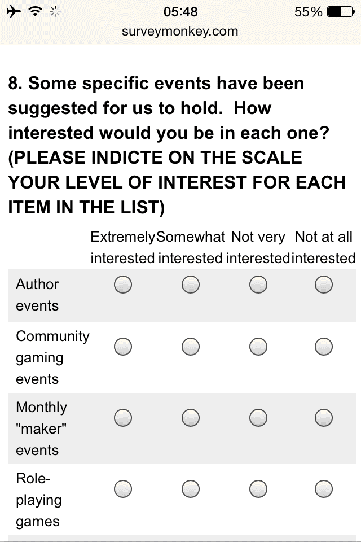

For example, I love, or loved, to set up my questions in what’s called a “grid” format (sometimes called table or matrix format). It’s very space efficient and reduces respondent burden. However, that question layout does not work well, if at all, on a phone screen, even a phablet.

Look at the nearby screenshot from a survey I took. Notice how the anchors (the written descriptions) for the four response options crowd together. Essentially, any question whose response options are presented horizontally may not display well.

The webform rendering may have implications for the validity of the data collected. If one person takes a survey with the questionnaire rendered for a 15-inch laptop screen while another person takes the “same” survey rendered for a 5-inch smartphone screen, are they taking the same survey?

We know that survey administration mode affects responses. Telephone surveys tend to have more scores towards the extremes of response scales. Will surveys taken on smartphones have some kind of response effects? I am not aware of any research analyzing this, but I will be on the lookout for it or will conduct that research myself.

So what are the implications for questionnaire design from smartphone survey taking?

First, we have to rethink the question design that we use. We may have to present questions that display the response options vertically as opposed to horizontally. This is a major impact. If you are going to use a horizontal display for an interval rating question, then you should use endpoint anchoring as opposed to having verbal descriptors for each scale point. Endpoint anchoring may allow display of the response scale without cramped words. But make sure you have constant spacing or you’re compromising the scale’s interval properties!
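The layout advice above can be expressed as a small decision rule. This is only a sketch of my reading of that advice, not how any survey tool actually behaves: the function name `choose_scale_layout`, the 600-pixel breakpoint, and the returned dictionary are all assumptions made for illustration.

```python
def choose_scale_layout(viewport_width_px, scale_labels,
                        interval_scale=True, breakpoint_px=600):
    """Pick a rendering for one rating question.

    breakpoint_px is a hypothetical cutoff, not a value taken from any tool.
    scale_labels is assumed to hold at least two labels, in scale order.
    """
    labels = list(scale_labels)
    if viewport_width_px >= breakpoint_px:
        # Wide screen: room for a verbal descriptor on every scale point.
        return {"orientation": "horizontal", "labels": labels}
    if interval_scale:
        # Narrow screen, interval rating: stay horizontal but keep only the
        # endpoint anchors so the words do not crowd together.
        anchored = [""] * len(labels)
        anchored[0], anchored[-1] = labels[0], labels[-1]
        return {"orientation": "horizontal", "labels": anchored}
    # Narrow screen, other question types: stack the options vertically.
    return {"orientation": "vertical", "labels": labels}


five_point = ["Very poor", "Poor", "Fair", "Good", "Very good"]
print(choose_scale_layout(375, five_point))
```

Constant spacing between scale points is a rendering concern that this sketch does not address; it only decides which labels to show and in which orientation.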

Second, we have to simplify our questionnaires. We need to make them shorter. A survey that takes a perfectly reasonable amount of time on a laptop with a table display will almost certainly feel longer on a phone because of the amount of scrolling required. While smartphone users are used to scrolling, there must be a limit to people’s tolerance. A 30-question survey on a laptop might take 3 to 6 screens but take 30 screens’ worth of scrolling on a smartphone. You might be tempted to put one question per screen to limit the scrolling. However, the time to load each screen, even on a 4G network, may tax the respondent’s patience.

Third, beyond question design we should reconsider the question types we use, such as forced ranking and fixed sum. Those are especially useful for trade-off analysis to judge what’s important to a respondent. However, they would be very challenging to conduct on a small screen. So, how do we conduct trade-off analysis on a smartphone? I’m not sure. Probably using a multiple choice question asking the respondent to choose the top two or three items.
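If you do fall back on a "choose your top two or three" multiple choice question, the analysis is a simple tally of how often each item was picked. The sketch below is one possible way to do that, not an established trade-off method; the function name `tally_top_choices` and the sample responses are invented for illustration.

```python
from collections import Counter


def tally_top_choices(responses, max_picks=3):
    """Return the share of respondents who picked each item.

    responses is a list of lists, one list of picked items per respondent.
    Picks beyond max_picks and duplicate picks are ignored.
    """
    if not responses:
        return {}
    counts = Counter()
    for picks in responses:
        # Drop duplicates while keeping order, then cap at max_picks.
        unique_picks = list(dict.fromkeys(picks))[:max_picks]
        counts.update(unique_picks)
    n_respondents = len(responses)
    return {item: count / n_respondents
            for item, count in counts.most_common()}


# Hypothetical responses, invented purely for illustration.
sample = [
    ["price", "speed"],
    ["price", "support", "speed"],
    ["quality"],
]
for item, share in tally_top_choices(sample).items():
    print(f"{item}: picked by {share:.0%} of respondents")
```

A tally like this shows which items matter most often, but it does not capture how much more one item matters than another, which is what forced ranking and fixed sum questions provide.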

Fourth, extraneous verbiage now becomes even more extraneous. In my survey workshops I stress removing words that don’t add value. With smartphone rendering, that becomes absolutely essential. Introductions or instructions that would cover an entire screen on a laptop would simply drive away a smartphone respondent. Even the questions and the response options should be as brief as possible. The downside is that we may be so brief that we are no longer clear, introducing ambiguity.

Fifth, the data should first be analyzed by the device on which the survey was taken. Are the scores the same? (There are statistical procedures for answering that question.) If not, the difference could be due to response effects caused by the device or to a difference in the type of people who use each device. Young people who have grown up with personal electronic devices are more likely to take surveys on a mobile device. So are differences in scores between devices a function of respondents’ age or a function of the device and how it displays the survey (response effects)? Without some truly scientific research, we won’t know the answer.
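As one example of such a statistical procedure, here is a minimal sketch of a permutation test comparing mean scores between two device groups. It is one option among several, chosen here because it needs only the Python standard library; the function name `permutation_test` and the sample ratings are assumptions for illustration, not data from any real survey.

```python
import random
from statistics import mean


def permutation_test(scores_a, scores_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test on the difference in mean score.

    Returns an approximate p-value: the share of random relabelings whose
    mean difference is at least as large as the one actually observed.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    observed = abs(mean(scores_a) - mean(scores_b))
    pooled = list(scores_a) + list(scores_b)
    n_a = len(scores_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # randomly reassign scores to the two devices
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate from ever being exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical 1-to-5 ratings, invented purely for illustration.
laptop_scores = [4, 5, 3, 4, 4, 5, 3, 4]
phone_scores = [3, 4, 2, 5, 3, 3, 4, 2]
p_value = permutation_test(laptop_scores, phone_scores)
print(f"Approximate p-value for a device difference: {p_value:.3f}")
```

A small p-value only says the two groups' scores differ more than chance would explain. It cannot say whether the device or the kind of person using it is responsible; repeating the comparison within age bands is one way to start separating the two.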

Not analyzing the data separately assumes the smartphone survey mode has no response effects. That could be a bad assumption. We made that same assumption about telephone surveys, and we now know it was wrong. Could organizations be making incorrect decisions based upon data collected via smartphones? We don’t know, but it’s a good question to ask.

In summary, the smartphone medium of interaction is a less rich visual communication medium than a laptop, just as telephone interviews are less rich since they lack the visual presentation of information. If we’re allowing surveys to be taken on smartphones, we must write our surveys so that they “work” on mobile devices — basically the lowest common denominator. Ideally, the survey tool vendors will develop ways to better render a survey webform for smartphone users, but there are clearly limits and the above suggestions should be heeded.