Measuring Service Effectiveness

“How are we doing?” It’s a natural question for an individual or an organization. In some cases the answer is easy because the objective criteria for success are simple, obvious, easy to collect, and easy to analyze. For example, a bricklayer is measured on the number of bricks laid in a day and the quality of the workmanship.

Measuring a service organization is neither simple nor obvious, and the results are not easy to evaluate. In good part this is due to a service organization’s multiple objectives, which are often in conflict. Service organizations need to be:

- Efficient in their use of resources

- Effective in the eyes of the customer. (And just to complicate matters, many service organizations have multiple customer constituencies, presenting more opportunities for conflicting effectiveness objectives!)

Why are these service measurements in conflict? Typically, being more effective means holding a buffer of resources to deal with unexpected demand, and a buffer that sits idle much of the time counts against efficiency. Making a rational trade-off decision requires good measurement of both service efficiency and effectiveness. Yet efficiency metrics typically win out. Why? Because efficiency is easy to define and measure with the operational systems we have in place to manage the service process. For example:

- Efficiency: Calls per day per service agent. Piece of cake to measure.

- Effectiveness: Quality of the service delivered. Hmmm… how do we even define quality?

The Balanced Scorecard concept drives home this need for internal measures of service efficiency balanced with external measures of service effectiveness to keep the service organization aligned with corporate goals. But what are good service measurements? How do we measure service effectiveness? How do we measure how good we are in the eyes of the customer?

Two broad approaches exist: internal and external service measurement techniques. We’ll address each below, along with the specific techniques within each.

Internal Service Measurement Techniques. By “internal” measurement techniques, we mean that we don’t involve the customer directly in the measurement process. Rather, employees or contractors put themselves in the shoes of the customer. Two internal measurement techniques can be applied.

- Call Monitoring. This technique is used in call centers. Someone listens in on the service interactions and judges the quality of the service delivered against a pre-established score sheet. The monitor might do more than listen in; they may also be “shadow monitoring” by watching the agents’ computer screens remotely.

  The technique has several advantages. First, it is unobtrusive. We don’t burden the customer, and the service agents do not know when they are being monitored. Second, evaluation can be done in real time. Thus, identified process issues can be addressed and remedied quickly before more customers become dissatisfied. Third, every service agent is measured against established criteria.

  The technique also has shortcomings. First, the monitors are applying their own qualitative assessments of the agents’ words and deeds. Multiple monitors are needed to ensure fairness. Second, the monitors must be trained and calibrated to be sure they are all measuring the same way. Most importantly, the criteria on the score sheet must reflect what is truly of concern to the customer. Some score sheets have arbitrary requirements that may not be important to customers, such as greeting the customer by name at least three times. Also, the score sheets typically derive an overall score by applying weights to the different sections. How were these weights derived? Typically, they are a managerial judgment, perhaps driven by short-term hot buttons, and not derived statistically.

  This technique, implemented differently, could be used in service settings other than call centers, though it may be more difficult to be unobtrusive and anonymous. For example, monitoring a field technician during a visit to a customer site has obvious shortcomings: the monitor can hardly go unnoticed.

- Mystery Shopping. Most of us have heard of this technique since it’s used heavily in consumer industries, and search engine ads barrage us with offers to “get paid while you shop or eat.” However, the concept is applicable in almost any service environment. (I conducted one of these studies for a technology vendor to see how their warranty processes performed in comparison to chief competitors. It was perhaps the most fun project I have ever done.) With mystery shopping, a contracted person pretends to be a customer and tries to “exercise” the various paths possible in a service interaction. The “shopper” has a score sheet similar in concept to the call monitoring score sheet.

  The primary advantage of this measurement approach lies in the ability to explicitly test service scenarios to see how the agents, and the system as a whole, perform. In other measurement techniques, you take what you get. Also, while a shopper does waste a service agent’s time, no real customers are directly affected.

  The shortcomings start with the cost. Clearly, this process is labor intensive. It would be prohibitively expensive to generate several “shopping” experiences with each service agent. Thus, this technique is not suitable for quality control monitoring. And just as with the call monitors above, the shoppers need to be trained and calibrated, the score sheet needs rigorous development, and summaries must be legitimate.
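
Both call monitoring and mystery shopping rely on a score sheet that rolls section scores up into one number, so the weights matter. The sketch below is only an illustration of that point: the section names, the 0 to 100 scores, and both sets of weights are hypothetical, and `overall_score` is a name invented here, not part of any monitoring product.

```python
# Hypothetical score sheet: section names, 0-100 scores, and weights are
# illustrative assumptions, not values from any real monitoring program.

def overall_score(section_scores, weights):
    """Return the weighted average of the section scores."""
    if set(section_scores) != set(weights):
        raise ValueError("every section needs exactly one weight")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(section_scores[s] * weights[s] for s in section_scores)
    return weighted_sum / total_weight


# One monitored call, scored section by section.
call = {"greeting": 90, "problem_diagnosis": 60, "resolution": 70, "closing": 100}

# Two managers with different "hot buttons" weight the same call differently.
courtesy_weights = {"greeting": 4, "problem_diagnosis": 1, "resolution": 1, "closing": 4}
outcome_weights = {"greeting": 1, "problem_diagnosis": 4, "resolution": 4, "closing": 1}

print(overall_score(call, courtesy_weights))  # 89.0
print(overall_score(call, outcome_weights))   # 71.0
```

The same call scores 89 or 71 depending only on whose judgment set the weights, which is why weights chosen by managerial judgment deserve scrutiny.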

External Service Measurement Techniques. In contrast to the internal techniques where we simulate being a customer, with external measurement processes we capture feedback directly from the consumers of the service.

- Unsolicited Comments. Our first external measurement technique is one that just appears: complaints or compliments where the customer took the initiative to tell us about their feelings. Few customers will take the time to send feedback (except people like me). So, it behooves an organization to make it easy to deliver comments. Toll-free hot lines and survey forms linked from the company’s home page are ways to promote this free research.

  The key advantage is cost. The book, A Complaint is a Gift, makes the argument that a complaint is free market research. It’s not free if we create systems to encourage comments, but it’s still very inexpensive for the value received. If someone took the time to complain, the complaint probably has a germ of truth to it. These customers are telling us where our system failed or supported them. And some people will expound in great detail (like me).

  The shortcomings are significant. Clearly, these comments represent extreme positions. They are not typical and need to be interpreted as such. Many companies post survey forms on their web sites and believe the results can be generalized to all customers. That is wrong.
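
To see why unsolicited comments cannot be generalized, consider a minimal sketch with made-up numbers. The customer counts and the comment rates below are assumptions chosen purely for illustration; the only idea taken from the text is that customers at the extremes are more likely to speak up.

```python
# Hypothetical customer base: number of customers at each satisfaction level
# (1 = very unhappy, 5 = delighted). All numbers are made up for illustration.
customers = {1: 50, 2: 100, 3: 300, 4: 400, 5: 150}

# Assumed chance that a customer at each level volunteers a comment.
# Extremes are assumed far more likely to speak up than the middle.
comment_rate = {1: 0.20, 2: 0.05, 3: 0.01, 4: 0.01, 5: 0.10}


def mean_rating(counts):
    """Average rating, weighting each level by how many people hold it."""
    total = sum(counts.values())
    return sum(rating * count for rating, count in counts.items()) / total


def extreme_share(counts):
    """Fraction of people at the two extreme levels (1 or 5)."""
    return (counts[1] + counts[5]) / sum(counts.values())


# Expected number of commenters at each level under the assumed rates.
commenters = {r: customers[r] * comment_rate[r] for r in customers}

for label, group in (("All customers", customers), ("Commenters", commenters)):
    print(f"{label}: mean {mean_rating(group):.2f}, "
          f"extremes {extreme_share(group):.0%}")
```

Under these assumed numbers, the extremes make up one-fifth of all customers but roughly two-thirds of the commenters, and the average rating among commenters differs from the average across the whole base.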

In the remaining external measurement techniques, we actively solicit feedback from our customers.

- Personal Interviews. A structured interview is perhaps the simplest way to gather information from customers. These could be done through email exchanges, by phone, or preferably in person. Richer contact media allow us to sense feelings beyond just the words. With phone we get intonation. In person, we get body language.

  The key advantage of interviews is the richness of detail they provide. We learn not just the type of issue (positive or negative) but also the detail behind it. Thus, we can better develop corrective action plans. If we use a purposeful selection process to identify people for interviewing, then we are capturing feedback not just on the extremes, but covering the spectrum of customer feelings. However, the number of interviews will not likely lead to statistically significant findings. That’s not the goal.

  Interviews have their challenging requirements. Note the term “structured interview.” For the data to have meaning, we must collect it in at least a semi-structured way. This means developing a set of questions to guide the interview. Good interviewing skills are needed, as is coordination among multiple interviewers. Clearly, this is a labor-intensive process, especially for in-person interviews.

- Focus Groups. Also known as “small group interviews,” focus groups share most of the strengths and weaknesses of personal interviews. Rather than interviewing each person individually, we interview them as a group over the course of an hour or two.

  The key advantage focus groups provide is the interaction among the participants, making for even richer, more detailed feedback than with individual interviews. We really get the “why behind the what” of customer issues. We also gain some efficiency since we’re gathering feedback from a dozen or so people at once.

  The key challenges are logistical. Planning and execution are vital. The customers must be clustered geographically, unless you use an online discussion group to serve as a web-based focus group. The moderator’s ability to facilitate a good discussion is absolutely critical.

- User Group Feedback. User group meetings provide an opportunity to collect a wealth of information. Gathering feedback at user group meetings through interviews or focus groups is just plain common sense. We have a group of people who have a strong commitment to our products. And they are likely to be very willing to tell us what they feel and what they’d like to see.

  That strength is also the weakness of the approach. They are current customers and will drive product ideas toward improving their own use of the product rather than toward ideas that will appeal to an expanded market. These customers may also now feel ownership for product direction and be disappointed if they don’t see what they proposed. Positioning is critical with user groups.

- Mass Administered Surveys. I suspect that most of you reading this article expected it to focus on customer surveys. Surveys are great at telling us how our customer base as a whole feels, if done properly. We gather structured data (hopefully) from a sample of our “population” and can then generalize the results from the sample to the population.

  The key benefit of the survey technique is that it provides an efficient means of generating a broad overview of how our customers feel, identifying strong and weak points in the service system. Incident surveys (also known as event or transactional surveys) can flag customers in need of a service recovery. Surveys are not good at generating the detail behind the feelings. (Hold that question…)

  To generate meaningful data, surveys have strong requirements. The survey questionnaire must avoid introducing bias, the questions must reflect the true issues of concern to customers, and the resulting data must support the desired analysis. The survey administration has to avoid biasing results, and we must get enough responses for statistically significant findings. Finally, the survey data analysis has to be executed correctly to give voice to the message buried in the numbers.
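
One common way to gauge whether a survey has “enough responses” is the margin of error for a reported percentage. The sketch below uses the standard formula for a proportion with a finite population correction. The function name `margin_of_error`, the default of 1.96 (roughly 95% confidence), and the worst-case proportion of 0.5 are choices made here for illustration, and the formula assumes a simple random sample with no response bias.

```python
import math


def margin_of_error(n, population=None, p=0.5, z=1.96):
    """Approximate margin of error for a proportion from a simple random sample.

    n          -- number of completed responses
    population -- size of the customer base (None = treat as very large)
    p          -- assumed proportion; 0.5 gives the widest (worst-case) margin
    z          -- z-score for the confidence level (1.96 is roughly 95%)
    """
    if n <= 0:
        raise ValueError("need at least one response")
    standard_error = math.sqrt(p * (1 - p) / n)
    if population is not None:
        if n > population:
            raise ValueError("sample cannot exceed the population")
        if population > 1:
            # Finite population correction: sampling a large share of a
            # small customer base shrinks the margin.
            standard_error *= math.sqrt((population - n) / (population - 1))
    return z * standard_error


# Hypothetical response counts, for illustration only.
for responses in (100, 400):
    print(responses, round(margin_of_error(responses), 3))

# The same 400 responses drawn from a hypothetical base of 2,000 customers.
print(round(margin_of_error(400, population=2000), 3))
```

With 100 responses, the worst-case margin is roughly plus or minus 10 percentage points; quadrupling the responses to 400 only halves it. None of this corrects for a biased questionnaire or biased administration, which the formula simply assumes away.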


We’ve covered a lot of ground here. Your question may well be, “So, which measurement process is best?” The answer is: All of them and none of them.

Every research technique has strengths and every research technique has weaknesses.

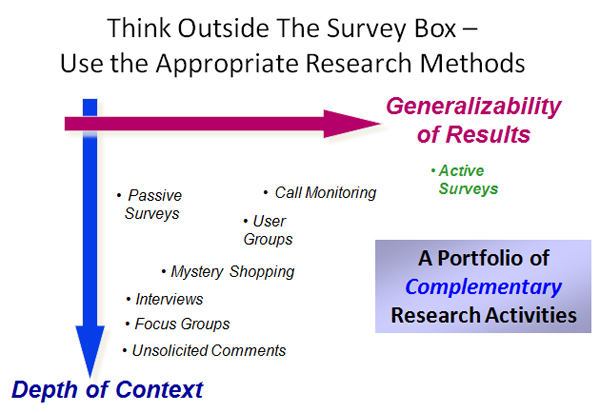

Re-read the above descriptions and you’ll see that some techniques (e.g., focus groups and interviews) are good at generating detail-rich findings while others (e.g., surveys) are good at getting summary findings that represent our customer base as a whole. What’s needed is a portfolio approach. Just as your financial portfolio should have a balance of investment instruments, so too should your service measurement approach.

The nearby chart makes this point. Proper interpretation and use of the findings from a research technique means understanding its place in the research spectrum. Rigorous application of a portfolio of complementary service effectiveness measurement techniques helps ensure we truly understand how our customers view us and how to address those issues. In short, our Balanced Scorecard becomes balanced properly.