Survey Question Ambiguity: What Exactly Does the Public Support?

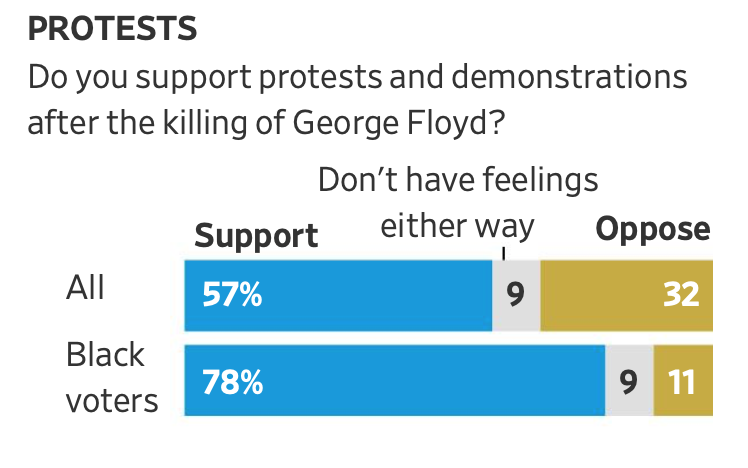

A WSJ/NBC poll from July 2020 indicated that a majority of registered voters support the protests in the wake of George Floyd's killing.

But is that what the poll actually measured?

A recent Wall Street Journal and NBC poll (released on July 21, 2020) looked at public support for the protests in the wake of George Floyd’s killing. Question 21 in the telephone survey asked:

Q21a. Do you support the right to peaceably protest for racial equality?

Q21b. Do you support the specific goals of the Black Lives Matter protestors?

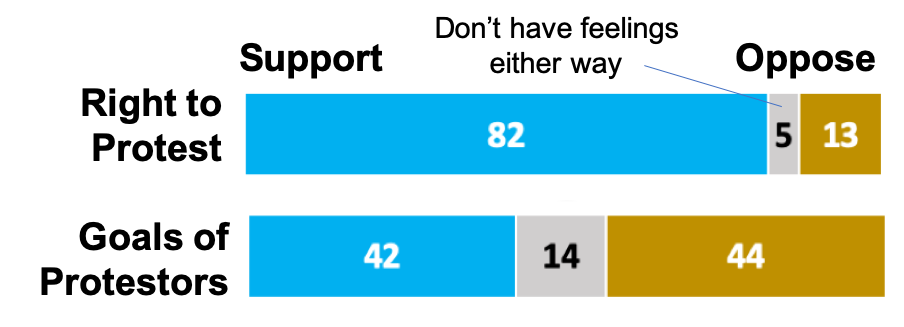

The results (shown in the nearby chart) indicate a significant divergence between support for the First Amendment right to peaceful protest and support for what the protesters say they want.

WHOA. Not so fast!!

I just lied to you. But with good reason: it was the best way to teach the impact of ambiguous survey question wording. This poll contains valuable lessons for our organizational surveys, beyond the ethical question of my lie.

Here’s the actual question that was asked, how the results were graphically presented in the article, and the table as published with the full results.

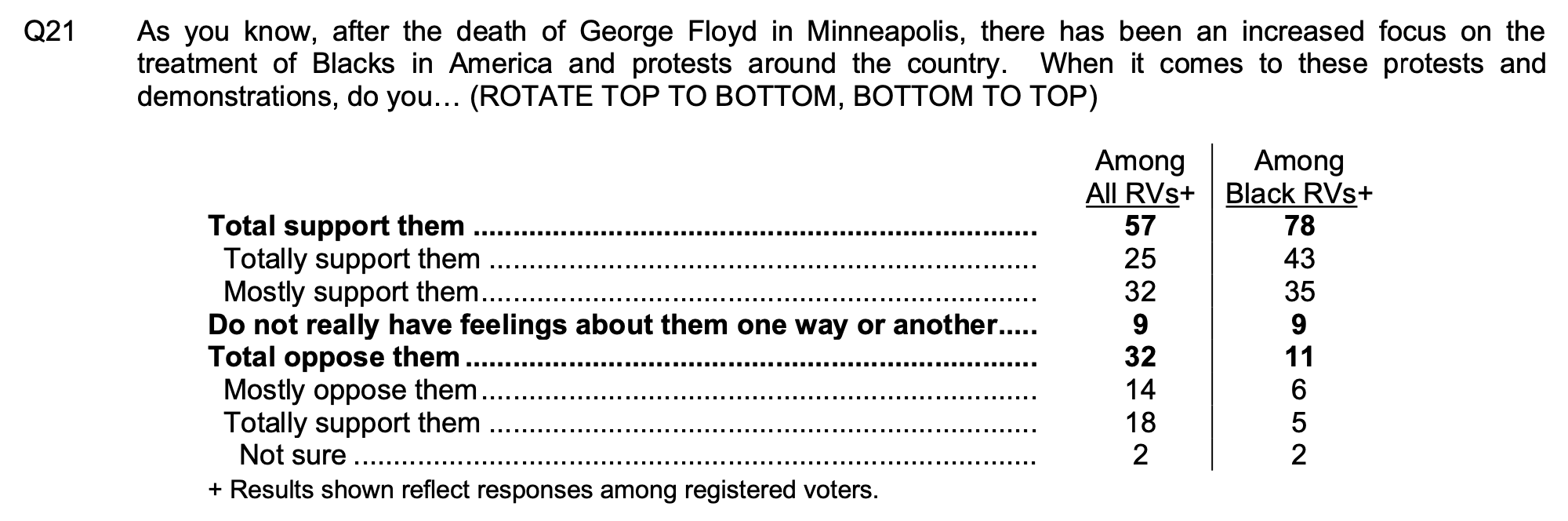

Q21. As you know, after the death of George Floyd in Minneapolis, there has been an increased focus on the treatment of Blacks in America and protests around the country. When it comes to these protests and demonstrations, do you support or oppose them? [rotate order]

Follow up: Would you say you totally or mostly support [oppose] them?

[Note: From the information presented, I am not 100% clear how the scale was presented to the respondent. This is my best guess.]

WSJ Poll Protest Support Level

Q21 Results Breakdown

Minor snits: The perceptive reader will notice that "Totally support" is listed twice in the data table. I did not edit that; it's a screenshot from the WSJ page. Those who wrote up and proofread the poll results were just plain sloppy. I also grimaced at the question's sentence structure: it packs many constructs into contorted grammar. Try diagramming that sentence. Some respondent confusion is inevitable.

Now to the main point. Notice the inherent ambiguity in the question as asked. I teased it out with my “lie” at the top. A reasonable person could:

While some may want to conflate these concepts, they are distinctly different.

The chosen phrasing doesn't make that distinction, and that is neither a trivial nor a subtle issue.

Why did these professional surveyors choose that phrasing? Were they purposely trying to conflate the two in the minds of the respondents, or were they just sloppy? Given the awful grammar, I lean toward the latter.

In fact, it appears that respondents did make the distinction, and maybe an additional one. Look at the detailed results for the poll. While 57% "support" the protests (see the table of results above), notice the split between:

I will suggest that the folks who “mostly support” the protests didn’t “totally support” them either because of:

Think about it… what does “mostly support” mean? Unfortunately, the pollsters lacked the inquisitiveness to ask a follow-up question: “why only mostly support?”

The breakdown between totally and mostly support was NOT reported in the WSJ article. You have to find the detailed results to see it, and the article provides no direct link to the detailed backup that I could find. The graph could readily have used two shades each of blue and brown to show the breakdown. But they chose not to. Why?

In other words, while I lied to you at the start of this article, those who designed the questionnaire and reported the results misled the readers through their question wording and display of findings.

The survey designers chose a badly truncated scale. As a respondent, you could "mostly" or "totally" support [oppose] the protests. But couldn't a respondent legitimately only "somewhat" support or oppose them? With this scale, the respondent's options are Totally >> Mostly >> Neutral. The cognitive difference between Totally and Mostly is small, whereas the gap from Mostly to Neutral is huge.

The truncated scale pushes respondents to more extreme responses.

And notice that the results table reports the sum of Totally and Mostly as "Total Support." Could they be more confusing in their verbal and graphical presentation? Some good lessons here for data visualization!


It goes without saying that there’s a great deal of social pressure to support the BLM protests, lest one be “cancelled.” This is a form of conformity bias.

Notice the combined impact of the truncated scale and the conformity bias. The desire to conform means we lean toward supporting the protests, but we can’t just “somewhat support” them. We have to “mostly support” them. And then that is reported as “Total support.”

Ya gotta love it.

Further, the seven questions immediately preceding this one were about race relations and racial discrimination. So the "mental frame" created in the survey process was that the protests were about race relations. This is a good example of a survey's sequencing effect enhancing the conformity bias.

The question, and the whole survey, bypasses a byproduct of the protests: the violence, looting, and increased crime seen in many cities, which have continued for months after Floyd's killing as I write this in late July. That destruction has nothing to do with the protests for racial equality, but the protests seem to have opened the floodgates for those with other agendas.

Here's the point. As surveyors and researchers, whether in public opinion research or on organizational issues, our goal should be to properly inform readers and decision makers about the state of opinion in our research population. That requires asking the right questions the right way and honestly reporting the results. This poll fails to do that.

In my classes I see many organizational surveys where the respondent is “led” to a desired response using any of a number of techniques, including ones discussed here. Some of these might be innocent mistakes; some not.

If you purposely engaged in deception in a survey for your company or organization, then you should be encouraged to find new opportunities. If it was inadvertent, then take my survey training class!
