Summary: Charming, posh hotels may not have charming surveys if those surveys were designed by people lacking significant experience in survey questionnaire design. This article reviews an event survey for a stay at a hotel that is part of the Relais & Châteaux Association. We see many shortcomings in the survey design, some of them due to translation issues. This article is a collaboration with OmniTouch International’s CEO, Daniel Ord, who personally experienced this survey.

~ ~ ~

Most people, including those of us who are proud to be professionals in the Customer Service field, would assume that the more luxurious the brand, the more likely its customer survey process would represent the pinnacle of achievement. Unfortunately, paying more money (in this case for a luxurious hotel stay) did not equate to a superior survey program. We’ll open with some background and then look at the survey.

Background

Over the year-end holidays, Daniel and his spouse decided to stay at the same lovely restored castle in Germany where they had spent their honeymoon. The castle-cum-hotel is part of the Relais & Châteaux Association, an exclusive collection of 475 of the finest hotels and gourmet restaurants in 55 countries.

Daniel booked the stay online from their home in Singapore. The communication and service were impeccable. In fact, the Receptionist advised that if they wanted to enjoy the Gourmet Restaurant, they should change the dates of the stay to ensure that the restaurant would be open. So Daniel pushed their arrival back one day from the original plan and smiled at their good fortune.

On the morning of checkout, December 23rd, Daniel received an email invitation from the Association to comment on a stay that, according to the invitation, began on the evening of December 21st. However, he had moved the reservation to the night of December 22nd and, in fact, had not even checked out yet.

Given how wonderful the stay had been up to that point, they were a little surprised at the perceived “urgency” to complete a survey before the experience was even over! Obviously, this was a process or administrative error, but Daniel’s customer-service-industry mindset kicked in, and he wondered how the reservation system could be “correct” while the timing of the survey invitation was “incorrect”. They decided to complete the survey only after leaving the property, so they could give it proper attention at their next destination.

The Online Survey Experience

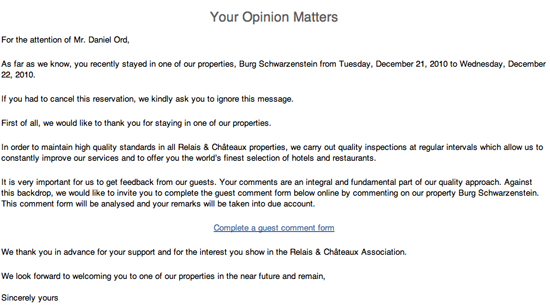

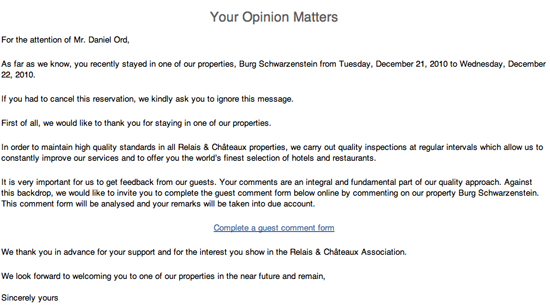

Before even getting to the survey itself, the survey invitation (see nearby) contains some odd wording and quite frankly is off-putting. A key purpose of the invitation is to motivate the respondent to take the survey. This invitation doesn’t pass that test.

- Look at the opening sentence: “As far as we know, you recently stayed in one of our properties” on such-and-such date. Daniel’s initial reaction was, “You are darn right I stayed at this property, and I have the American Express bill to prove it!” Being greeted by name was a positive, but the wording that followed was very odd. Shouldn’t they know? If they wanted to confirm the information on record, they should simply have asked for confirmation. Perhaps this was an issue of translation.

- The second line makes you really wonder. “If you had to cancel this reservation, we kindly ask you to ignore this message.” Daniel’s gut reaction, “If they don’t even know if I was an actual ‘guest’ then I am not very motivated to tell them how to improve.” It’s pretty clear that their reservation system and survey system are not tightly linked, but it leaves the guest wondering how organized they are.

- The third line indicates that they conduct “quality inspections at regular intervals”, but what is unclear to Daniel, the customer, is whether he is part of this quality inspection process or whether this refers to inspections done by Association inspectors. The phrase raised more questions in his mind than it answered.

Only in the last paragraph of the survey invitation does the Association (finally) state that “Your comments are an integral and fundamental part of our quality approach.” Ah, so only after reading through the entire invitation did Daniel understand where he fit into the picture.

Now onto the Survey Itself!

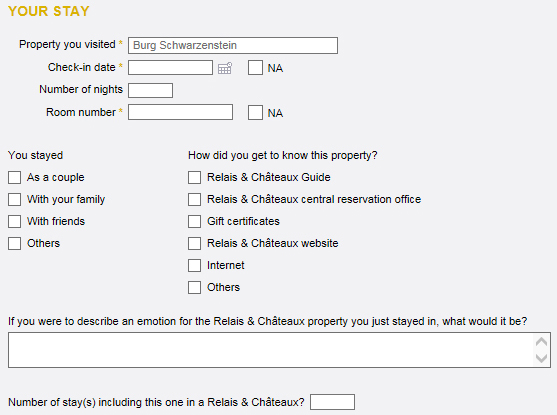

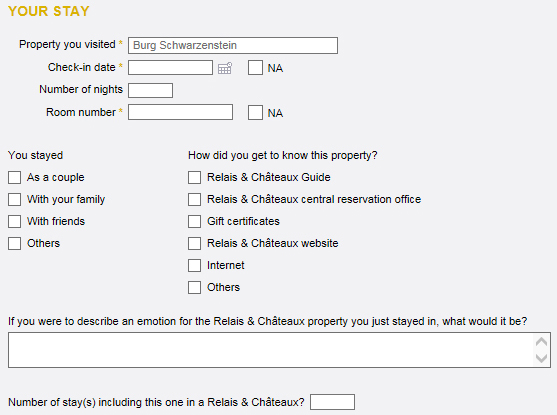

First of all, notice some graphic design features (which admittedly are hard to grasp from the individual screenshots here). Sections are blocked off with a gray background, a nice design touch that helps orient the respondent. But the opening title, “Your Opinion”, is followed immediately by a section titled “Your Stay”, an odd juxtaposition of headings. More importantly, “Your Stay” solicits basic details of the stay, not opinions. Did anyone proof the layout?

The “Your Stay” section requires confirmation of the place and dates of the stay, both auto-filled but editable. Given these fields, the invitation’s hedged wording about whether the guest actually stayed could certainly be revised. Note that they ask for the guest’s room number. The room number and the number of nights in the stay should be in the hotel’s transactional data, so why ask for them here? Daniel understood why they wanted the room number (to address any stated issues with that specific room), but he had a gut-level reaction to what felt like an invasion of privacy. The questions got Daniel thinking in ways that run counter to the goal of getting honest feedback. In other words, they activated a response bias. As a rule, demographic questions should go at the end of the survey for exactly this reason.

Another translation and/or design issue can be seen in the “You stayed” question. We like the complete-the-sentence structure used here, but it falls apart with “Others”. Besides, what does the “Others” option mean, and why is there no interest in asking for details? We can infer the demographic groups of interest to the Association, but it seems odd that other groups are not of interest.

The next question appears to be another translation issue: “How did you get to know this property?” The smart aleck in us wants to respond, “By staying at the hotel, of course.” Much better phrasing would be, “How did you learn about this property?” Again, look at the checklist. It is very focused on Relais & Châteaux information sources. Do these options meet their research objectives? We cannot answer that, but we question it.

Next they ask, “Number of stay(s) including this one in a Relais & Châteaux?” Previously, they used the term “properties” without reference to Relais & Châteaux; “properties” should be included here to avoid confusion. Moreover, this is another data point that should already be in their customer records. Daniel said he was tempted to enter a larger number so his responses would carry more weight.

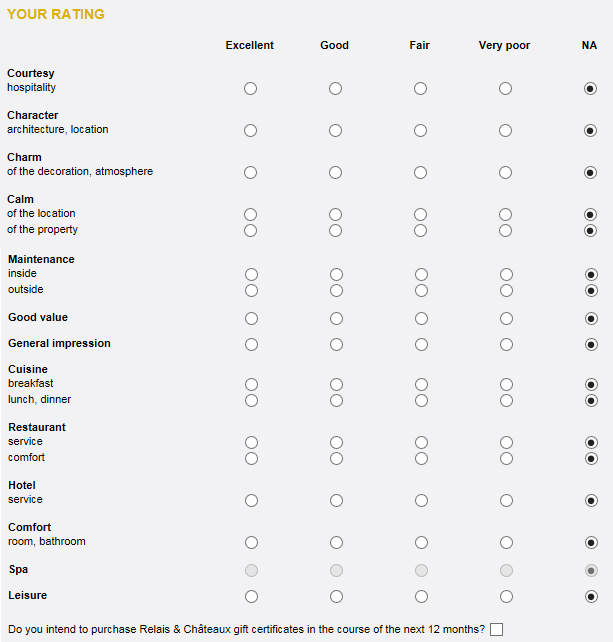

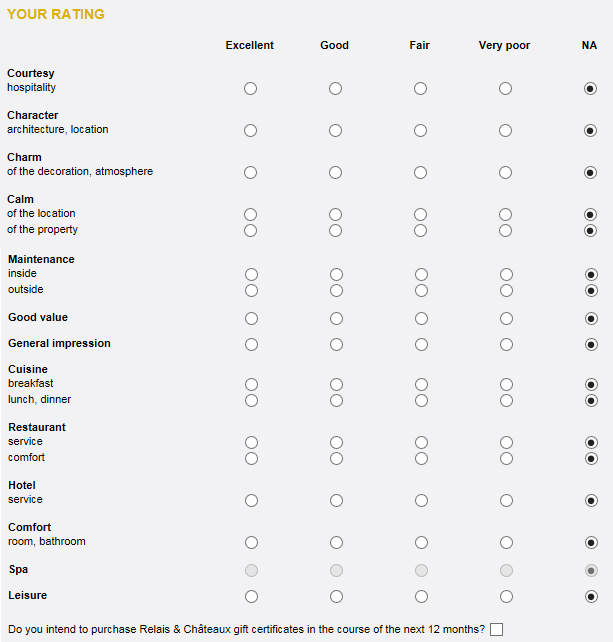

In the next section they’re asking for “Your Rating” on aspects of the stay. First, note the scale. The difference between Very Poor and Fair is huge. If you thought something was poor, how would you score it?

Next, look at the selection of items on which they want feedback. What’s missing are the various customer touchpoints, e.g., making the reservation, check-in at reception, the concierge, and check-out. They apparently assume that their service is so consistently good that there’s no need to check its quality, apart from the very broad item “Hotel – Service”.

The layout here is also puzzling. There appear to be categories (Courtesy, Character, Charm, etc.), most with one or two attributes to be measured. We again see apparent translation issues that create ambiguity. “Calm” of the location and of the property is odd phrasing, as are “Charm” of the “decoration”, “Leisure”, and even “Cuisine”. We are unclear about the distinction between “Calm of the location” and “Calm of the property”. What is meant by “Maintenance”, which has an industrial tone? And “inside” and “outside” of what: one’s room or the hotel? “General impression” of what?

We also see double-barreled questions, which ask two things at once. The “character” of the architecture is different from the “character” of the location, depending on your interpretation of “location”. And what if there was more than one restaurant (there was) and you ate in more than one?

Overall, many of the questions are very unclear. This section reads like an initial rough draft badly in need of revision cycles and pilot testing.

They end the section by asking, “Do you intend to purchase Relais & Châteaux gift certificates in the course of the next 12 months?” with a checkbox for the respondent to indicate something, but we’re not told what. At minimum, “Yes” needs to appear next to the box. Even so, this is an odd placement for the question. Is it meant as an overall indicator of satisfaction with the property? If so, it seems an odd one, especially given the phrasing. Usually, we ask future-intent questions on a scale using the phrasing, “How likely are you to…” Daniel felt strongly that this question belongs in a follow-up survey, not in the feedback survey.

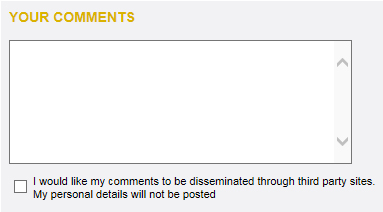

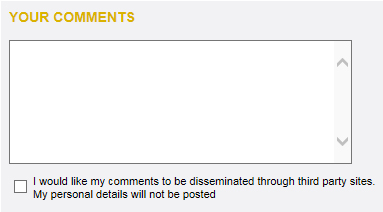

Next we have a single comment box with no prompt to elicit improvement suggestions or the like. Remember that a reservations agent made a very helpful suggestion. Without a prompt such as “Did anyone deliver exceptional service to you?”, that aspect of the transaction might be forgotten when providing comments.

Then, we encounter a bizarre and horribly phrased statement preceded by a checkbox. “I do not agree to the passing on of my comments in anonymised form to third-party websites (i.e. your personal information will not be passed on, only your comments about the property)”. Please read that two or three times and see if you can fathom the impact of checking or not checking the box. What if 1) you do not want your comments passed on to third-party sites with attribution and 2) you do not want your personal information passed on either? Never use double-negative sentence construction. See why?

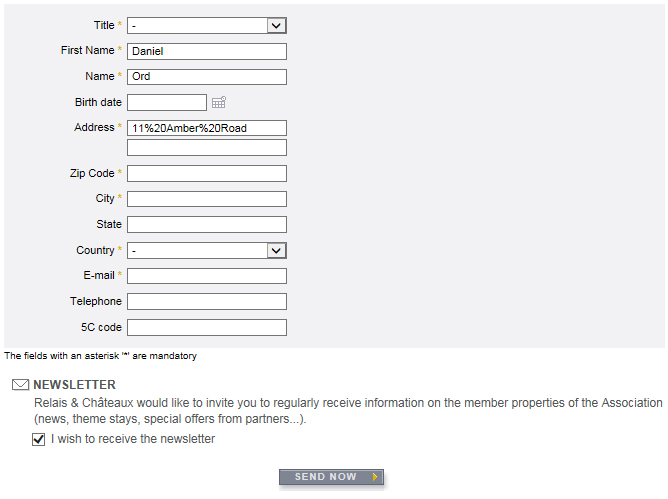

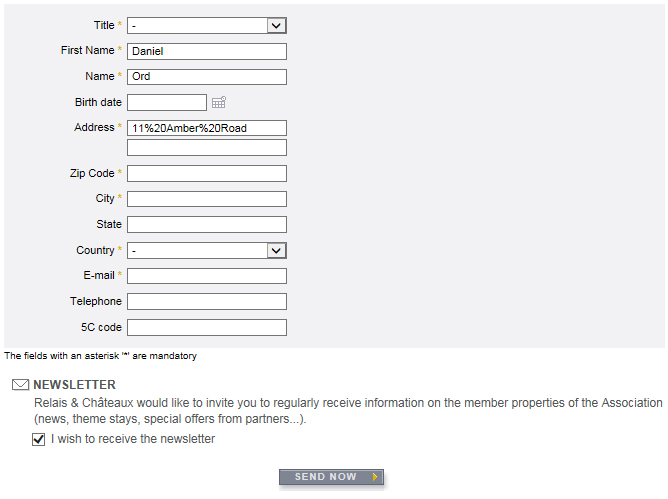

Next we encounter a section box with no title. Why? It contains a whole series of required fields for all your personal information. You do NOT have the option of submitting this survey anonymously, and after that highly ambiguous preceding question, the likelihood of closing the browser window without submitting the review is now extremely high.

Why would they ask for all this? The survey is not anonymous anyway: Daniel’s name was readily visible in the URL of the survey screen. And what is a 5C code? Daniel knows. Fred has no idea. Never use terminology that some survey respondents may not understand.

At the bottom of all this, as if they are trying to hide something, they finally get around to telling the respondent that all fields marked with an asterisk are mandatory. That should be at the beginning.

The end of the form has disclaimers about the use of personal information. But again, these statements can create more confusion given the earlier question.

In summary, you can see that even a simple, one-screen hotel-stay survey requires a degree of rigor if you’re going to develop meaningful, actionable, accurate data — and not tick off your customer! The designers of the survey instrument have introduced a tremendous amount of instrumentation bias and activated response bias that compromise the validity of the data collected.