Summary: The survey critiqued here is an example of instrumentation bias, showing how survey statistics can be manufactured to support an argument. Rather than objectively collecting data to understand public opinion, the survey is designed to drive public opinion. The Bolton Local Historic District Study Committee achieves this through intentional instrumentation bias, as described below.

~ ~ ~

Some of the more fun surveys for me to critique are ones from organizations that are trying to generate data to argue some point of view. The purposeful data are usually generated through some combination of intentional instrumentation bias (especially loaded and leading wording) and administration bias, as I teach in my survey workshop series. I’ll call them advocacy surveys. We see these types of surveys from advocacy groups and public policy organizations, and politicians!

Instrumentation Bias: A survey instrument or questionnaire that does not capture data to properly reflect the views of the respondent group contains an instrumentation bias. Many types of errors — whether intentional or unintentional — can lead to instrumentation bias.

Loaded language and leading wording that drive the respondent to particular responses are two examples of instrumentation bias.

Some of the surveys can be downright hysterical in the loaded and leading language they use. Some are more subtle to the point where the respondents and the consumer of the findings don’t recognize the manipulation. It’s akin to the question of how to lie with the statistics that are pre-existing, but here the data are manufactured to achieve a goal. We may say this is how to lie with survey statistics.

A true researcher develops a hypothesis or research question and then conducts research that generates valid, objective data to test the hypothesis and draw conclusions. That’s the scientific method. Sometimes the data do not support the hypothesis, and the researcher then looks for a hypothesis that the data suggest, which may prompt additional research to confirm. This, in fact, happened to me in my doctoral research.

In these other cases, the “conclusion” has already been made before the research is performed. The goal is to generate data to support the conclusions, and probably to avoid generating data that may confound the preset conclusion. I recently got one of these in my home town of Bolton, Massachusetts from the Local Historic District Study Committee.

I must admit upfront that my objectivity in critiquing this survey was challenged since it quite literally hits close to home, which I’ll explain at the end of this article. Briefly, I disagree with the underlying premise of those conducting the “study.” I present my biases here so you can filter out how, if at all, they have colored my critique. That’s being intellectually honest. I am confident in my professional objectivity in critiquing the survey practices described below.

~ ~ ~

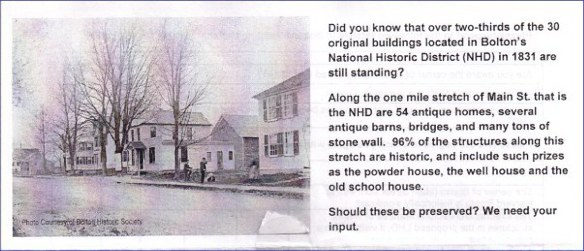

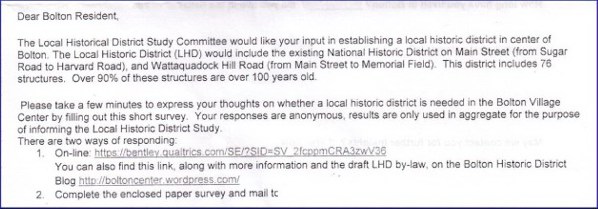

I received a one-page, double-sided mailing early in the week of January 23, 2012 from the Local Historic District Study Committee, a group established by our selectmen. The call to action to get you to open the mailing lays out how many old structures lie in Bolton’s National Historic District. “Should these be preserved? We need your input.”

We don’t learn how many historical structures have been destroyed or altered in the past 10, 20, or 30 years, but the direct implication is that they are threatened. Even the website to which one is directed later contains zero data about the destruction of historic properties. Only that “without a local historic district, our village center could be lost forever through future demolitions and alterations.” (emphasis added)


So is this a solution looking for a problem?

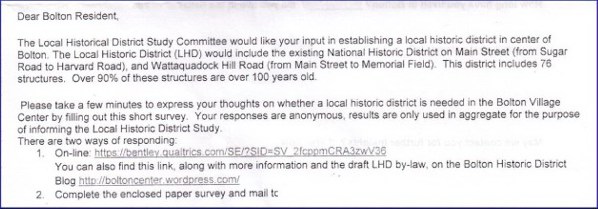

The introduction below the fold — the sheet was folded in thirds for mailing purposes, avoiding the need for an envelope — says, the “Committee would like your input in establishing a local historic district… Please take a few minutes to express your thoughts on whether a local historic district is needed…” I will show you that the survey is not designed to capture input but rather to drive opinion.

To take the survey, you have two choices. First, you can type in a 55-character (sic) web address, which includes an underscore covered by an underline. This would challenge anyone who is not web savvy. But you can also find the link at a shorter web address that contains more information about the committee’s work. Could that information bias the respondent who is about to take the survey?

The web site also includes the latest draft of the proposed by-law, draft #7. That raises an interesting question.


The Study Committee is on its seventh draft of a by-law to be presented to the town meeting in 3 ½ months in May, with the formal review cycle among town committees about to start for any proposed law. Why is the committee collecting town-wide input now? While they have held many open meetings, the survey purports to be an opportunity for the general citizenry to provide input. If true, the survey should have been done months ago when alternative methods for historic preservation might have, or should have, been considered.

Second, you can complete the paper survey on the back side of the mailing, and mail it to the committee chairperson. No envelope or postage is provided.

The question to ask as a survey professional is whether the administration method(s) is going to lead to an unbiased response group. The administration method here clearly presents hurdles to completion, meaning those with strong feelings are more likely to complete the survey. If true, the survey administration method is creating a non-response bias. This bias occurs when those who respond to a survey are likely different from the entire group of interest.
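The non-response bias described above can be made concrete with a little arithmetic. The sketch below is purely illustrative: the town size, the opinion counts, and the response rates are all invented assumptions, not data from the Bolton survey. It simply assumes that residents with stronger feelings are more willing to clear the hurdles to respond, then compares the share who agree in the whole town with the share who agree among those expected to respond.

```python
# Hypothetical town of 1,000 residents on a 5-point agreement scale
# (1 = Strongly Disagree ... 5 = Strongly Agree). All numbers are invented.
population = {1: 50, 2: 150, 3: 500, 4: 200, 5: 100}

# Assumed chance that a resident holding each opinion completes the survey.
# Stronger feelings -> more willing to clear the hurdles to completion.
response_rate = {1: 0.30, 2: 0.10, 3: 0.05, 4: 0.10, 5: 0.30}


def agree_share(counts):
    """Share of a group answering Agree (4) or Strongly Agree (5)."""
    total = sum(counts.values())
    return (counts[4] + counts[5]) / total


# Expected number of completed surveys from each opinion group.
respondents = {score: n * response_rate[score] for score, n in population.items()}

true_share = agree_share(population)
observed_share = agree_share(respondents)

print(f"Agree share, whole town:  {true_share:.1%}")
print(f"Agree share, respondents: {observed_share:.1%}")
```

Under these made-up assumptions, 30% of the town agrees, yet agreement among expected respondents comes out at roughly 48%. Nobody lied on the form; the gap comes entirely from who chose to respond.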

The survey method and the introduction also raise the question of the unit of analysis for this survey. Each household (I presume) received one copy. Yet, at our town meeting in May we don’t vote by household; we vote by individual. This is a common problem with no clean solution. I encounter this with business-to-business surveys where the company being surveyed has multiple people who interact with the company conducting the survey. Is the relationship being surveyed at the business level or at the individual level? We can make strong arguments both ways, as is the case with this LHD survey. I would have included in the instructions a comment to make additional copies for each member of the household who is a registered voter. But these issues with the survey administration are minor compared to issues with the survey instrument.
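To see why the unit of analysis matters, consider a minimal sketch with invented returns. Each tuple is a hypothetical household: the number of registered voters in it and the single answer on its one returned survey. The function names and the data are assumptions for illustration only.

```python
# Hypothetical returns: (registered voters in household, the household's one answer).
# "yes" means the returned survey supports the by-law. Data are invented.
returns = [(1, "yes"), (1, "yes"), (2, "no"), (4, "no"), (2, "yes")]


def household_share(rows):
    """Share of 'yes' when each returned survey counts once."""
    return sum(1 for _, answer in rows if answer == "yes") / len(rows)


def voter_share(rows):
    """Share of 'yes' when each survey is weighted by voters in the household.

    This assumes every voter in a household agrees with the returned answer,
    which is exactly what a one-per-household mailing cannot verify.
    """
    total_voters = sum(voters for voters, _ in rows)
    yes_voters = sum(voters for voters, answer in rows if answer == "yes")
    return yes_voters / total_voters


print(f"By household: {household_share(returns):.0%} yes")
print(f"By voter:     {voter_share(returns):.0%} yes")
```

With these made-up returns the household tally shows a 60% majority in favor, while the voter-weighted tally shows 40%. The same pile of paper supports either headline depending on the unit chosen.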

~ ~ ~

So let’s look at this survey instrument.

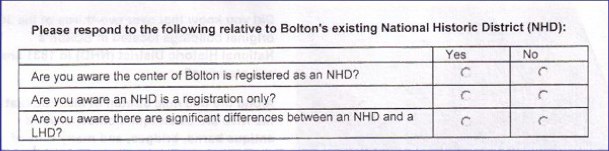

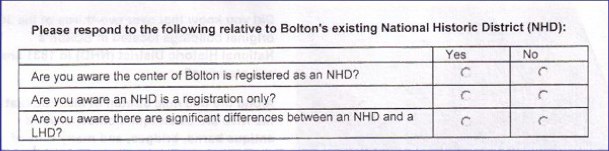

The first three questions are awareness questions that use a binary yes/no scale. Here that may be appropriate because you either are aware of these things or not. You probably can’t have some limited degree of awareness. The designer also uses the NHD and LHD acronyms here and throughout the survey. NHD is defined at the top of the survey, but LHD is only defined in the introduction. Use of acronyms in surveys is dangerous. If the respondents confuse the meaning of the acronyms, then how valid are the data generated? I avoid acronyms in my survey design work for this reason.


Further, the second question asks, “Are you aware an NHD is a registration only?” What does “registration only” mean? It’s very poorly worded shorthand phrasing that creates serious ambiguity for the respondent.

But what’s the purpose behind these awareness questions? My educated guess is that the question is actually meant to educate the respondent, to make known to them that houses in a National Historic District are simply registered. No restrictions on what is done with the property are part of the NHD listing. That “shortcoming” is, in fact, the goal of creating the LHD (Local Historic District).

In other words, the purpose of the question is not to measure awareness but to make people aware of the distinction between NHD and LHD. As we will see, much of this survey’s purpose is to affect the thinking of the respondent, not to allow the respondent to “express [their] thoughts.” In a broad sense that makes this survey an example of push polling.

Push polling is a practice that has recently become part of the political landscape. In a push poll, telephone calls are made before an election purporting to be a survey about the election. But the questions are all designed to impart information, typically highly negative, about one candidate. An example might be, “How aware are you that Mr. Candidate was arrested three times on drunk driving charges?”

If one were designing a survey to counter this survey, a push polling question could be, “Are you aware that the US Constitution provides specific protection for private property rights but makes no mention of collective property rights?”

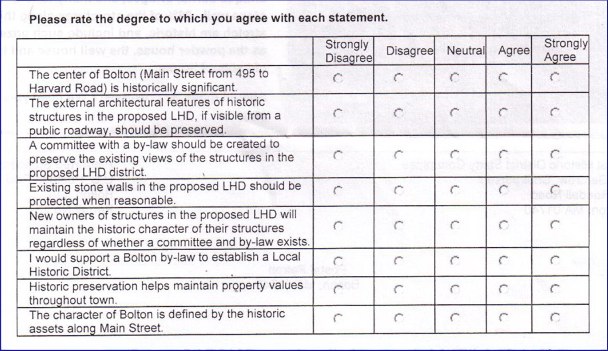

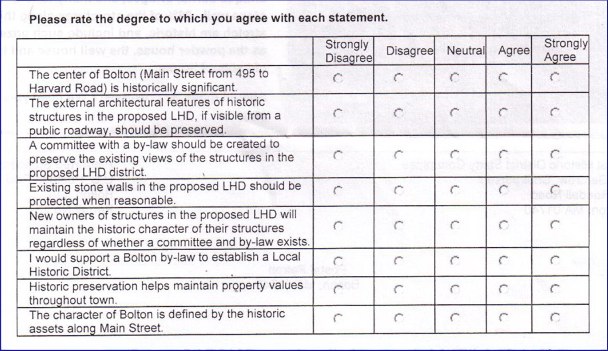

Now we encounter eight interval-rating questions, asking respondents their strength of agreement with these eight statements. Just in case someone misses the point, the first and last questions measure the same attribute — Importance of old homes to Bolton’s character.


Most of these statements are hard to disagree with. Yes, the town center is historically significant. Yes, architectural features and stone walls should be preserved. Yes, historic preservation affects property values. Who would disagree with those? Not me. They’re motherhood and apple pie. I will be stunned if 80% of respondents don’t Agree or Strongly Agree with those statements. That’s the evidence that the Study Committee wants to show an LHD is needed and wanted by the citizenry.

Perhaps more importantly, these questions get the respondent into a pattern of agreeing with the statements presented. Don’t discount that effect. That’s one of the validity shortcomings of this question type, known as Likert-type questions. Most of us like to be agreeable, and we can get put into that routine by asking a series of questions to which we will agree. Once into that rhythm, we’re asked whether a town committee should be appointed and empowered to impose what we just agreed should happen. The flow makes it easy now to agree with that.

Notice the language used in the questions. The language chosen conditions the respondent to view historic homes as an asset of the town collectively. Yet, you might say, aren’t these homes privately owned? But if the views of the historic homes are a collective right, then conflicts between these rights will inevitably exist. These contested rights between private property rights and collective rights must be adjudicated by government, in this case a committee established by law to control what owners do with their property in order to preserve the collective right. Pretty slick reasoning, isn’t it? And all an outcome of the survey design.

From a questionnaire design perspective I did find the fifth question puzzling: “New owners of structures in the proposed LHD will maintain the historic character of their structures regardless of whether a committee and by-law exists.” Clearly, the desired response by the study’s author is Strongly Disagree. If owners were going to maintain historical structures properly, then the issue of an LHD is moot.

A question structured like this is normally known as a reverse-coded question. Questionnaire designers put one or two reverse-coded questions early in a survey to make sure the respondent doesn’t get into a response routine, just giving the same answer without reading the question. I applaud the apparent attempt to establish questionnaire validity. However, this question is positioned too late in the survey to serve that purpose. The respondent’s response pattern has already been established. Further, I suspect many people, to the chagrin of the study’s author, will check Strongly Agree because they’re in the agreeable rhythm.

Routine occurs when the respondent gives the same response to every question. Once into the response rhythm, the respondent likely doesn’t read and consider the questions fully. This effect compromises instrument validity since the respondent isn’t really answering the questions.
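An analyst can check returned surveys for this kind of routine. The sketch below is a hypothetical illustration, not the committee's method: it assumes a 5-point scale (the mailing's actual number of scale points is not stated here), treats the fifth of the eight statements as the reverse-coded item, and uses invented answers. It flags respondents who gave the same rating to every item and recodes the reverse-coded item so that a high score always points the same way.

```python
SCALE_MAX = 5        # assumed 5-point scale: 1 = Strongly Disagree ... 5 = Strongly Agree
REVERSE_ITEMS = {4}  # zero-based index of the fifth statement, treated as reverse-coded


def is_straight_lined(answers):
    """True when the respondent gave the identical rating to every item."""
    return len(set(answers)) == 1


def recode(answers):
    """Flip reverse-coded items so a high score always means 'supports an LHD'."""
    return [
        SCALE_MAX + 1 - a if i in REVERSE_ITEMS else a
        for i, a in enumerate(answers)
    ]


agreeable = [5, 5, 5, 5, 5, 5, 5, 5]  # in the rhythm, agrees with everything
attentive = [5, 4, 5, 4, 1, 4, 5, 5]  # reads the fifth statement and disagrees

print(is_straight_lined(agreeable), recode(agreeable))
print(is_straight_lined(attentive), recode(attentive))
```

After recoding, the agreeable respondent's fifth answer becomes a 1 amid a row of 5s, exposing the inconsistency, while the attentive respondent's answers line up. A flagged respondent is not necessarily careless, but a cluster of them suggests the instrument has lulled people into a rhythm.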

Putting aside the question structure and location, notice the intent of the question. The question presumes that it is the responsibility of new home owners to maintain their houses’ features, thus reinforcing the collective asset argument. The implicit message is that newcomers to town won’t understand that the home they just bought provides benefits to non-owners, so we need to control what they do to preserve the collective property right.

Why didn’t they ask about current home owners in the proposed district? That would have personalized the impact of the by-law to current residents, but that may have antagonized people who will cast votes in the May town meeting.

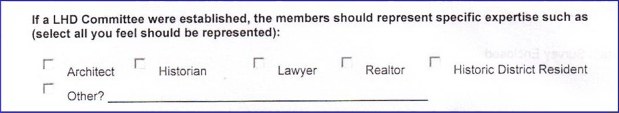

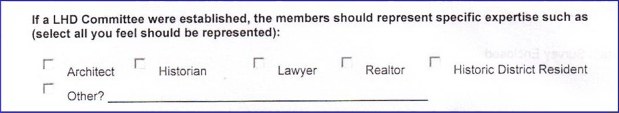

The last closed-ended question asks what specific expertise should be on an LHD committee. It’s well-known that respondents gravitate toward the first option provided, which is Architect. The last option is Historic District Resident. This structure is already baked into the proposed by-law on page 2. What they omit as an option is Study Committee Member, which the proposed by-law includes as a member of the LHD committee. I’ll note here, if I have properly ascertained the addresses of the five members of the Study Committee, only one owns a home that would be affected by the proposed by-law. I did chuckle a bit at the inclusion of Lawyer as a potential member of the LHD committee. The Study Committee apparently sees the potential impact this by-law could have on the town’s litigation budget.


~ ~ ~

The above points make readily clear that the survey is designed to generate data to support a position — that historic preservation is desirable and the only means to do so is through the creation of a town committee with force of law to impose their decisions.

If this were an objective survey — truly a study — of how best to preserve historical structures and how to address contestable rights, we would see other questions such as:

- “Maintenance of all homes in the town helps maintain property values throughout the town.” (This would provide analytical contrast with the penultimate interval-rating question.)

- “Private property rights should be greatly respected when considering the various elements that may comprise an LHD by-law.” (Note that the survey never asks anything about private property rights, only collective property rights. This omission is stunning given that the fundamental purpose of an LHD is to restrict private property rights.)

- “Property owners whose property rights are restricted by an LHD should be compensated by lower property tax rates, shifting the tax burden onto those who enjoy the benefits of these older homes without paying the cost of ownership or maintenance of them.” (In other words, if you believe in collective property rights, put your money where your mouth is.)

- “Rather than restrict what property owners can do with their historical property, the town should provide incentives to encourage preservation.” (Would a carrot-and-stick approach instead of a command-and-control approach be preferable, especially since there is no evidence of an imminent threat to historic structures? The proposed by-law is all stick and no carrot. Note that the by-law draft currently has a $300 per day fine, and no provisions for expedited emergency repairs.)

Inclusion of these types of questions would be fair and balanced. Why not pose questions about which approach to historic preservation is more preferred? The reason is clear. Such questions might provide data that conflict with the objectives of this Study Committee.

Let’s be honest. The Committee’s objective is to implement a command-and-control system over what people who own homes in the proposed district can do with those homes. The survey’s purpose is to manufacture data to marshal a call for collective action to control private property. Those are words that command-and-controllers don’t like to use. “Preserve historic assets” sounds so much more benign and beneficial.

In most all public policy matters there are pros and cons, benefits and costs that must be weighed. To inform the public decision requires capturing information on both sides of the trade-off. The survey provides no data to elucidate such trade-off decisions. It wasn’t designed to do so.

~ ~ ~

As a history major, I also know that history is not stagnant. The houses built today will be historical structures in 100 years. Shouldn’t we preserve these assets as well, whether in the proposed district or not? Why isn’t that proposed? Because it’s easier to enact laws that initially control the behavior of a few. Fewer people affected means fewer people to react against being controlled by the collective entity. Note that no questions were asked about the preservation predispositions of current historic home owners. It’s a free pass to vote for the law if you’re not affected. No skin off my back, and I benefit. What’s not to love? Of course, with the precedent set, the next law may seek to control your property.

~ ~ ~

When reading this article, you have no doubt discerned my views on the topic. I promised to explain. You may have guessed I own an historic home. I bought my Federalist period home in Bolton 30 years ago. The original part of the house dates back to 1804. My home is not in the proposed Local Historic District, but experience shows that when command-and-control legislation is implemented, the tendency is for it to expand, not contract. In my neighborhood we have about a half dozen old homes, all being maintained and improved by their owners without the benefit of those who claim to know what’s in our best interests better than we do, as is the case with the homes in the proposed historic district.


I bought an old house because I love old houses and I wanted a house I could renovate. My childhood home was a Royal Barry Wills cape. In my 30 years of home ownership, I have renovated most all of my house, doing much of the work with my own two hands, being very sensitive to its history. While certainly updating it, I have preserved and used materials from the house wherever practical — and some where it wasn’t practical.

I have tried to make the home more handsome, and I think you would find few disagreements from my neighbors. (See nearby photos.) I didn’t need anyone to tell me what I should or shouldn’t do with my property. In fact, had my home been under the proposed law’s jurisdiction, I would have needed permission to replace the asphalt shingles on the face of the house with clapboards! Would I have bought the house if I had to run the gauntlet of an appointed commission and possibly “any charitable corporation in which one of its purposes is the preservation of historic places, structures, buildings or districts,” which is included in the definition of Aggrieved Person in the proposed by-law? Probably not.


I am concerned about the preservation of historic homes.

So, I did something novel.

I bought one. What a concept!

~ ~ ~

To close, I will admit that I considered completing the survey providing answers that I knew the Study Committee would not want to see. However, that would just make me equal in intellectual dishonesty to those who designed a data collection instrument whose data will undoubtedly be presented as an unbiased view of the thoughts of the town’s citizens.

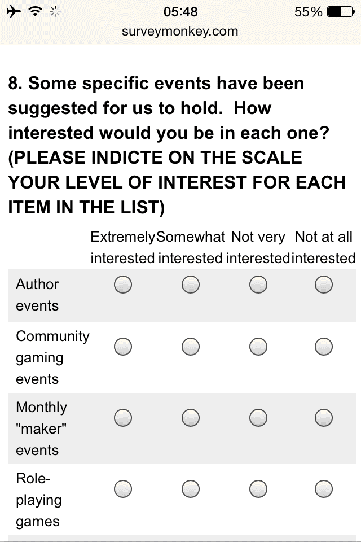
