Survey Question Type Choice: Options

Once we have our list, how can we ask for prioritization? Here are our survey question choices.

- Multiple-Choice Checklist, Single Response Question Type

- Multiple-Choice Checklist, Multiple Response Question Type

- Forced Ranking Question Type

- Interval Rating Scale Question Type

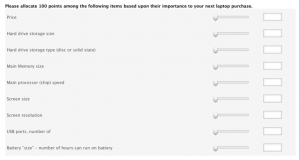

- Fixed Sum Question Type

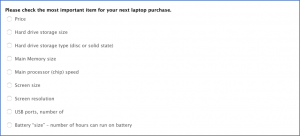

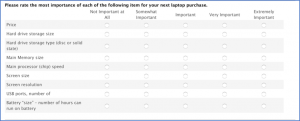

The seemingly most straightforward question type is shown in the nearby screenshot: ask the respondent to choose the single most important item. This is known as a Multiple-Choice, Single Response Checklist.

Our analysis will be the percent of respondents who selected each item.
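As a rough illustration of that analysis, here is a minimal Python sketch. The function name `percent_selected` and the sample answers are made up for this example; they do not come from any particular survey tool.

```python
from collections import Counter

def percent_selected(responses):
    """Return {item: percent of respondents who chose it}.

    Assumes single-response data: one chosen item per respondent.
    """
    if not responses:
        return {}
    counts = Counter(responses)
    total = len(responses)
    return {item: 100 * n / total for item, n in counts.items()}

# Hypothetical answers from five respondents (one choice each)
responses = ["Price", "Battery life", "Price", "Weight", "Price"]
shares = percent_selected(responses)

for item, pct in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{item}: {pct:.0f}%")
```

Because each respondent picks exactly one item, the percentages add to 100%.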

Sounds clean and simple, but…

- We don’t learn what might be second in importance (or third). To give an extreme example, what if everyone viewed the same two items as first and second, respectively, in importance? You’d never learn what the second item was. In our laptop example, price is likely to be a first choice for lots of people. Knowing #2 would help our decision making.

- Choosing one answer may not be so simple for some people. What if someone views two items as equally important? Now they’ll agonize over which to check. The question is no longer simple.

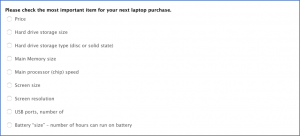

Multiple-Choice Checklist, Multiple Response Question Type

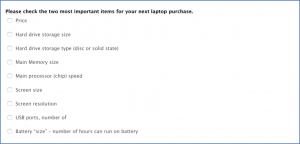

A quick change to the previous question is to let the respondent choose more than one answer. (Notice that the radio buttons, which signify “check only one,” are now checkboxes, which signify “check all that apply.”) It’s a Multiple-Choice, Multiple Response Checklist.

But we probably don’t want someone to check ALL that apply. If people check all of them, then we haven’t learned what’s truly important. Instead, instruct the respondent to check the two (or three, depending on the length of the list) most important. Now we’re getting the top two in importance. That likely provides better data for decision making. (Do note – and tell your readers – that the percentages will now add to 200% when every respondent checks two items.) And the respondent burden is quite low.
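To see why the percentages add to 200%, here is a small Python sketch with made-up data. The function name `percent_checked` and the four sample respondents are assumptions for illustration only.

```python
from collections import Counter

def percent_checked(responses):
    """Return {item: percent of respondents who checked it}.

    Each response is the collection of items one respondent checked.
    The base is the number of respondents, not the number of checks.
    """
    if not responses:
        return {}
    # set() guards against the same item being recorded twice for one person
    counts = Counter(item for picks in responses for item in set(picks))
    n = len(responses)
    return {item: 100 * c / n for item, c in counts.items()}

# Hypothetical "check your top two" answers from four respondents
top_two = [
    ("Price", "Battery life"),
    ("Price", "Weight"),
    ("Battery life", "Screen size"),
    ("Price", "Battery life"),
]
pct = percent_checked(top_two)
total_pct = sum(pct.values())  # 200 when everyone checks exactly two
```

The percentages are calculated on respondents, so each person contributes to two items and the column total doubles.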

Many survey tools provide validation (edit check) capabilities for multiple response checklist questions, perhaps only in a more expensive subscription. If so, you could instruct people to check up to two items or exactly two items. If someone checked three items or, in the “exactly two” case, fewer than two, they’d get an error message.
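The logic behind such a check is simple. The sketch below is a hypothetical Python version, not the behavior of any specific survey product; the function name, parameters, and error wording are all assumptions.

```python
def check_selection(selected, limit=2, exact=False):
    """Return an error message, or None if the selection is acceptable.

    exact=False: "check up to `limit` items"
    exact=True:  "check exactly `limit` items"
    """
    n = len(set(selected))
    if exact and n != limit:
        return f"Please check exactly {limit} items."
    if n > limit:
        return f"Please check no more than {limit} items."
    return None

# Three checks fail under either rule; one check fails only under "exactly two"
too_many = check_selection(["Price", "Weight", "Screen size"])
one_up_to = check_selection(["Price"])
one_exact = check_selection(["Price"], exact=True)
```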

Many software tools also support a follow-up question for the multiple-choice checklist that would ask, “Of the two you selected, which is most important?” You would then have the #1 and #2 importance items, which leads us to the forced ranking survey question type.

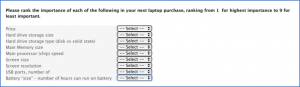

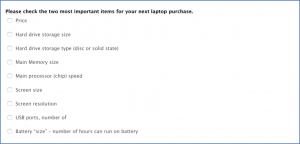

We’ve all seen these questions as in the nearby example. Forced ranking questions are a favorite approach since they appear to provide prioritized information, but I caution you about using them. Why?

- Analytical Burden. In the example here with 9 items, we’d get a 9×9 matrix of data – the percent of respondents who selected each item for each ranking point. Are you going to give your manager a chart with 81 data points – probably a stacked bar chart? Of course not! You’ll summarize the data, probably showing the percent of respondents who chose each item as #1 in importance and as #1 or #2. That’s the useful information – and it’s almost exactly what the multiple-choice checklist option above provides.

(Note: while you might have data coded into your spreadsheet as numbers, you cannot legitimately take means of data from a forced ranking question. It’s ordinal data, not interval data. A companion article discusses how survey question type determines the data type generated, which then determines analytical options.)

- Respondent Burden. If our analysis will focus on the top rankings, why ask people to rank order anything beyond the top ranks? You’re asking people to do a lot of work for no benefit. And it is considerable work – respondent burden – that may lead to item non-response or to abandoning the survey altogether. Worse, they may just click on buttons to get through the question, giving you garbage data. How much thought would you put into properly ranking 9 items? Exactly!

- Respondent Annoyance. Software designers have devised several ways to present the forced ranking question to the respondent. They all stink to some degree, some horribly so. In the nearby example, you have to select ranks using drop-down boxes, which are low in usability. Further, if you select the same rank more than once, you get an error message. The annoyance factor, especially if it’s a required answer, may lead to survey abandonment.
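The summary described under Analytical Burden (percent ranking each item #1, and percent ranking it #1 or #2) can be sketched in Python as follows. The data layout, the function name `top_rank_summary`, and the three-item sample are assumptions for illustration. Note that the sketch counts ranks and never averages them, since the data are ordinal.

```python
def top_rank_summary(rankings, items, top_n=2):
    """Return {item: (percent ranked #1, percent ranked within top_n)}.

    Each ranking is a dict mapping item -> rank (1 = most important).
    """
    n = len(rankings)
    summary = {}
    for item in items:
        first = sum(1 for r in rankings if r[item] == 1)
        top = sum(1 for r in rankings if r[item] <= top_n)
        summary[item] = (100 * first / n, 100 * top / n)
    return summary

# Hypothetical rankings from four respondents
items = ["Price", "Battery life", "Weight"]
rankings = [
    {"Price": 1, "Battery life": 2, "Weight": 3},
    {"Price": 1, "Battery life": 3, "Weight": 2},
    {"Price": 2, "Battery life": 1, "Weight": 3},
    {"Price": 1, "Battery life": 2, "Weight": 3},
]
summary = top_rank_summary(rankings, items)
```

Everything below the top ranks is discarded in this summary, which is the point: the respondent did that ranking work for nothing.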

Friends don’t let friends — or clients — use forced ranking questions.

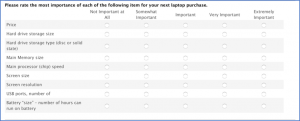

Presenting each item to the respondent asking for a response on an interval rating scale is perhaps the most common way to get data on importance. It’s a nice clean way to get data with fairly low respondent burden, and we can calculate mean scores since the data are – hopefully – interval in nature.

However, the approach has one serious drawback similar to the “Check all that apply” in the checklist approach. Nothing stops the respondent from giving the same rating to every item. What have you learned if every item is rated the same? Nothing!
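One way to see how big this problem is in your own data is to count the respondents who gave every item the same rating. The Python sketch below is a minimal illustration; the function names and the sample ratings are assumptions.

```python
def is_straight_lined(ratings):
    """True if a respondent gave the same rating to every item."""
    return len(ratings) > 1 and len(set(ratings)) == 1

def straight_line_rate(all_ratings):
    """Percent of respondents whose ratings are identical across items."""
    if not all_ratings:
        return 0.0
    flagged = sum(1 for r in all_ratings if is_straight_lined(r))
    return 100 * flagged / len(all_ratings)

# Hypothetical 1-to-5 importance ratings: four respondents, four items each
ratings = [
    [5, 5, 5, 5],
    [4, 5, 3, 5],
    [3, 3, 3, 3],
    [5, 4, 4, 2],
]
rate = straight_line_rate(ratings)
```

A high rate suggests the question is telling you little about relative importance.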

We really want the respondent to engage in trade-off analysis, and interval rating questions don’t support that goal. While rating questions are perhaps the most common way to measure importance, they are arguably the least useful.

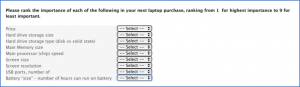

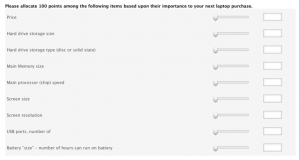

The checklist version asked people to select the top item(s) in importance. In the forced ranking version, we ask people to put the items in rank order. What if instead we asked the respondents to assign points to each item based on importance? Now we have a fixed-sum question. (This goes by different names such as constant sum or fixed allocation.)

We give people, say, 100 points and ask them to distribute the points across the items. The points must add to 100. More points mean more importance. Our analysis focuses on the mean score for each item (since this is interval data) to see relative importance. That’s a real plus for this approach.
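Here is a minimal Python sketch of that mean-score analysis. The function name `mean_points` and the three sample allocations are made up, and the sketch assumes every respondent allocated points to the same set of items.

```python
def mean_points(allocations):
    """Return {item: mean points assigned} across respondents.

    Each allocation is a dict mapping item -> points from one respondent.
    """
    n = len(allocations)
    items = allocations[0].keys()
    return {item: sum(a[item] for a in allocations) / n for item in items}

# Hypothetical 100-point allocations from three respondents
allocations = [
    {"Price": 50, "Battery life": 30, "Weight": 20},
    {"Price": 40, "Battery life": 40, "Weight": 20},
    {"Price": 60, "Battery life": 20, "Weight": 20},
]
means = mean_points(allocations)
```

Because each respondent's points add to 100, the item means add to 100 as well, so each mean reads directly as a share of importance.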

However, the question type has downsides and design concerns:

- It is considerable work for the respondent and some people will want to skip it – similar to the forced ranking.

- Choose a survey tool that presents a running total to the respondent as they complete the question. Not all tools do that! The one shown here uses sliders to select the points, which are shown in the box to the right. Once you hit 100, you can’t slide for more points, but the running total is never displayed.

- It’s best to have the number of items divide evenly into the total points to simplify the math for the respondents. With 9 items – 9 items! – equal importance would be 11.11 points. So, if you absolutely must have 9 items, make the point total 90.

- Don’t allow entering negative numbers! Yes, I’ve seen respondents do that.

- Don’t use a lot of these questions in a survey. That will drive people away.

- It’s best to put these question(s) toward the end of the survey once you’ve developed rapport with the respondent and they are fully engaged.
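Two of the design concerns above, rejecting negative numbers and picking a point total that divides evenly, are easy to express in code. The Python sketch below is hypothetical; the function names and messages are assumptions, not features of any particular survey tool.

```python
def check_allocation(points, total=100):
    """Return an error message, or None if the allocation is valid."""
    if any(p < 0 for p in points):
        return "Points cannot be negative."
    if sum(points) != total:
        return f"Points must add to {total}; yours add to {sum(points)}."
    return None

def equal_share(total, n_items):
    """Points per item if every item were equally important."""
    return total / n_items

# A negative entry can still add to 100, so it is checked first
negative = check_allocation([120, -20])
short = check_allocation([50, 30])

# With 9 items, 100 points gives an awkward equal share; 90 gives a round one
share_100 = equal_share(100, 9)
share_90 = equal_share(90, 9)
```

Checking for negatives before checking the sum matters, because an entry like 120 and -20 would otherwise pass as a valid 100-point allocation.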

Conclusion

Measuring criticality or importance is a critical task for many survey designers.

- Fixed sum questions will give you the most useful data for analysis, but the respondent burden may be a turnoff.

- Forced ranking questions are annoying at best and provide limited analytical options.

- The “select one” checklist seems simple and enticing, and may be fine in some circumstances.

- The “select top two” checklist type is one to consider. The question has limited respondent burden, the data will be readily understandable, and you may get more useful data beyond selecting just the top one.